A standalone, CAT-agnostic service to prepare your Translation Memory (TM) for production. We use specialized TMX cleaning to remove noise, fix encoding issues, and filter segments for MT training and CAT workflows.

A standalone service that works directly with TMX exports. No specific CAT tool required.

Engineered specifically for MT training, Quality Estimation (MTQE), and CAT workflows.

Rule-based filters combined with language models to remove noise without losing data.

When your Translation Memory needs cleaning

Benefits of Translation Memory Cleaning

Cleaner TM data leads to more accurate MT training and fine-tuning results.

Removing linguistic noise improves the reliability of MT evaluation and QE outcomes.

Automated filtering keeps harmful or mistranslated segments out of your training data.

Consistent logic allows large TMX volumes to be processed quickly without manual rules.

Systematic identification of problematic segments reduces data risks and production errors.

Curated data ensures more predictable MT behavior and better control over your pipelines.

70-80%

of incorrect segments are usually identified during a routine scan & remove check

40+

quality check filters

including neural ones

Who Our Translation Memory Cleaning Is For

• Preparing TM for MT training or evaluation.

• Reducing noise that impacts MT quality.

• Improving TM reuse and consistency across products.

• Improving TM quality to increase reuse rates.

• Optimizing internal workflows.

• Delivering cleaner data to enterprise clients.

• Using Translation Memory as training data.

• Reducing the risk of training MT models on low-quality segments.

• Improving evaluation reliability by cleaning input data.

Translation Memories often accumulate years of formatting junk and “tag soup.” Instead of a basic search-and-replace, we target the specific patterns that break MT models and slow down linguists. This is where our filter library comes in. By running your data through these specialized filters, we isolate and remove the technical noise that standard tools miss, leaving you with a clean, high-performance dataset.

Cleaning shouldn’t mean losing your history. Our approach is designed to keep your high-quality segments intact while stripping away the encoding errors and “junk” that have accumulated over years of exports.

Standard tools are great for translating, but they aren’t built to find “glued words,” hidden Unicode noise, or broken length ratios. We identify the problems that usually only show up when your MT starts behaving strangely.

Most TMs were made for human reuse. We prepare them for the “AI era”—ensuring the data is clean enough for model training, evaluation, and automated quality estimation.

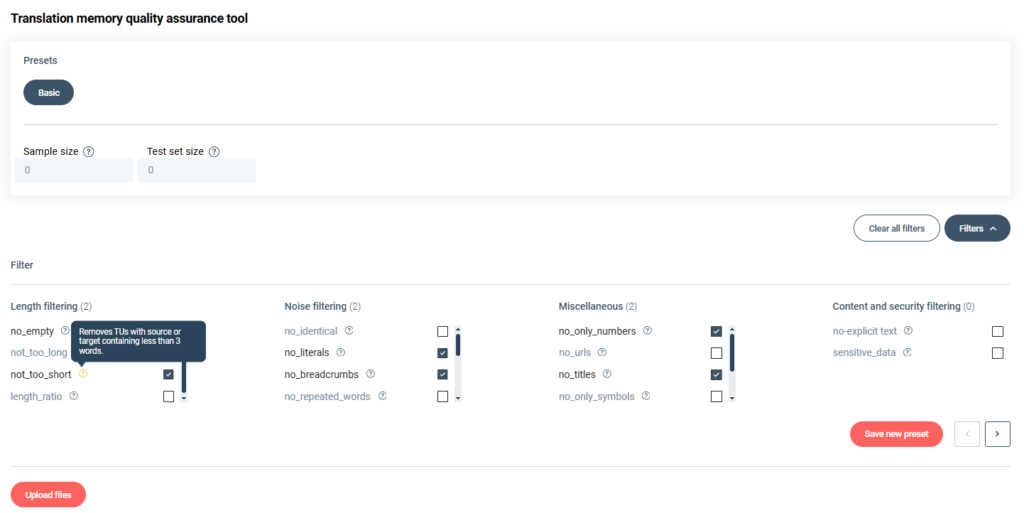

Length Filtering

no_empty

not_too_long

not_too_short

length_ratio

Noise Filtering

no_identical

no_literals

no_breadcrumbs

no_unicode_noise

no_bad_encoding

no_repeated_words

no_space_noise

no_paren

no_escaped_unicode

no_wrong_language

Miscellaneous

no_urls

no_titles

no_only_symbols

no_script_inconsistencies_sl

no_script_inconsistencies_tl

Quality & Neural

inc_transl_sl

no_only_numbers

no_empty

inc_transl_tl

no_number_inconsistencies

dedup

no_glued_words

Neural Filters

Optional
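To give a feel for what a rule-based filter does, here is a minimal Python sketch of four checks named after filters in the list above. It is an illustration under our own assumptions, not the production code: the function signatures, the `max_ratio=3.0` threshold, and the `failed_filters` helper are all hypothetical.

```python
# Illustrative sketch only: names mirror the filter list, logic and thresholds are assumed.

def no_empty(src: str, tgt: str) -> bool:
    """Pass only if both sides contain something other than whitespace."""
    return bool(src.strip()) and bool(tgt.strip())


def length_ratio(src: str, tgt: str, max_ratio: float = 3.0) -> bool:
    """Pass only if the longer side is at most max_ratio times the shorter one."""
    a, b = len(src.strip()), len(tgt.strip())
    if a == 0 or b == 0:
        return False
    return max(a, b) / min(a, b) <= max_ratio


def no_identical(src: str, tgt: str) -> bool:
    """Pass only if source and target differ (ignoring case and outer spaces)."""
    return src.strip().casefold() != tgt.strip().casefold()


def no_only_numbers(src: str, tgt: str) -> bool:
    """Pass unless either side has digits but no letters at all."""
    def only_numbers(text: str) -> bool:
        has_digit = any(ch.isdigit() for ch in text)
        has_letter = any(ch.isalpha() for ch in text)
        return has_digit and not has_letter
    return not (only_numbers(src) or only_numbers(tgt))


FILTERS = [no_empty, length_ratio, no_identical, no_only_numbers]


def failed_filters(src: str, tgt: str) -> list[str]:
    """Return the names of all filters the segment pair fails, in order."""
    return [f.__name__ for f in FILTERS if not f(src, tgt)]
```

Returning the names of the failed filters, rather than a single yes/no, makes it possible to review why a segment was flagged before anything is removed.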

To start, just send over your TMX files. We handle everything from single files to large batches. We clean your data based on your specific requirements by combining standard rules with language model technology to filter out unusable segments. You can also choose premium semantic checks via OpenAI or Azure. If you have high-priority content, we can include a human linguist to review and fix complex segments. This process keeps your translations safe while making sure the final TMX is ready for MT training or production.
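As a rough picture of the first automated step, the sketch below reads source/target pairs from a TMX file with Python's standard library and drops exact duplicates. It is a simplified illustration, not our pipeline: `read_pairs`, `dedup`, the exact-match language codes, and the tiny `SAMPLE` file are assumptions made for the example.

```python
import xml.etree.ElementTree as ET

# ElementTree exposes the xml:lang attribute under this namespaced key.
XML_LANG = "{http://www.w3.org/XML/1998/namespace}lang"


def read_pairs(tmx_text: str, src_lang: str, tgt_lang: str) -> list[tuple[str, str]]:
    """Collect (source, target) text pairs from every <tu> that has both languages."""
    root = ET.fromstring(tmx_text)
    pairs = []
    for tu in root.iter("tu"):
        segs = {}
        for tuv in tu.iter("tuv"):
            # Older TMX versions use a plain "lang" attribute instead of xml:lang.
            lang = (tuv.get(XML_LANG) or tuv.get("lang") or "").lower()
            seg = tuv.find("seg")
            if seg is not None:
                # itertext() keeps the text around inline tags such as <ph/>.
                segs[lang] = "".join(seg.itertext())
        if src_lang in segs and tgt_lang in segs:
            pairs.append((segs[src_lang], segs[tgt_lang]))
    return pairs


def dedup(pairs: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Keep the first occurrence of each pair, comparing case- and space-insensitively."""
    seen = set()
    unique = []
    for src, tgt in pairs:
        key = (" ".join(src.split()).casefold(), " ".join(tgt.split()).casefold())
        if key not in seen:
            seen.add(key)
            unique.append((src, tgt))
    return unique


# Hypothetical three-unit TMX; the second unit differs from the first only by a double space.
SAMPLE = """<tmx version="1.4"><header/><body>
<tu><tuv xml:lang="en"><seg>Save the file</seg></tuv><tuv xml:lang="de"><seg>Datei speichern</seg></tuv></tu>
<tu><tuv xml:lang="en"><seg>Save  the file</seg></tuv><tuv xml:lang="de"><seg>Datei speichern</seg></tuv></tu>
<tu><tuv xml:lang="en"><seg>Click <ph x="1"/>here</seg></tuv><tuv xml:lang="de"><seg>Hier <ph x="1"/>klicken</seg></tuv></tu>
</body></tmx>"""

pairs = read_pairs(SAMPLE, "en", "de")
clean = dedup(pairs)
```

In this sketch, language codes are matched exactly after lowercasing, so a file tagged `en-US` would need `"en-us"` as the source language; real exports usually call for more tolerant matching.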

Starting at 20–30 EUR per million characters

Custom requirements or high-volume discounts available upon request

Book a Call