The GenAI in Localization Conference, hosted by Custom.MT in December 2025, explored how new technology is transforming translation and multilingual workflows. Far from replacing translators, these tools have made localization specialists more important than ever. This article summarizes the main topics from the conference, and full Day 1 recordings are available online for anyone who wants to watch the sessions.

Quick Highlights From the Conference

- The keynote (Linas Beliūnas) explained what happens now that the AI hype is fading: companies need clear ROI, safer workflows, and human oversight.

- Google’s Ashish Chauhan showed how agentic AI is changing translation workflows and why agents are different from old MT pipelines.

- Marina Pantcheva (RWS) demonstrated how to “humanize” AI text with vivid examples of unnatural AI language and practical editing strategies.

- Konstantin Dranch shared new benchmark results on quality estimation systems and what they mean for reliability.

- Kelly Marchisio (Cohere) explained how multilingual LLMs are really trained and what teams must consider before building or fine-tuning their own.

- The WeShape AI panel offered real stories on spotting “rogue AI projects,” redirecting misaligned initiatives, and how women in localization are shaping responsible AI.

- The AWS fireside chat with Tanay Chowdhury explored how enterprises actually build AI systems and what agentic workflows will look like in the next 1–2 years.

- Eric Silberstein closed with a creative keynote on translation, transformers, and why AI inspired him to write futuristic fiction.

Bottom line: localization teams are now co-owning AI strategy. They are the bridge between LLMs, product teams, and real customer expectations.

Opening: The Industry Is Moving Fast, and Localization Is Leading It

Bryan Murphy

The conference opened with a reality check from Bryan Murphy.

He shared a striking number: 84% of all translated words at Smartling are now produced by AI, and almost half of that volume goes live with no human review.

For Bryan, this scale is only possible when you treat translation as manufacturing:

automate everything you can, embed QA into every step, and take hallucination detection very seriously.

Hallucinations matter at this scale. As Bryan noted, Google now translates nearly a trillion words every month with AI, and many companies want to translate far more than ever before. Some hallucination is inevitable, so if a global brand translates 10,000,000 words with AI and even 1% of the output is hallucinated, that is 100,000 faulty words, enough to create significant reputational risk.
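
The arithmetic behind that figure is easy to reproduce for your own volumes. The sketch below is an illustration only and was not shown in the talk; the function name `expected_hallucinated_words` is hypothetical, and it assumes the rate applies uniformly per word, which is a simplification.

```python
def expected_hallucinated_words(total_words: int, hallucination_rate: float) -> int:
    """Estimate how many words are affected at a given hallucination rate.

    Assumption: the rate applies uniformly per word (a simplification).
    """
    if total_words < 0:
        raise ValueError("total_words must be non-negative")
    if not 0.0 <= hallucination_rate <= 1.0:
        raise ValueError("hallucination_rate must be between 0 and 1")
    return round(total_words * hallucination_rate)


# The example from the talk: 10,000,000 words at a 1% rate.
affected = expected_hallucinated_words(10_000_000, 0.01)
print(f"{affected:,} words")  # 100,000 words
```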

What does this mean for localization teams?

Instead of replacing localization specialists, AI has made their work more responsible and more varied. Localization teams now need to be at the forefront of AI adoption: they must understand the risks and be able to explain and measure the business impact.

Keynote: The Morning After the Hype

Three years after ChatGPT arrived, we’re now in the “trough of disillusionment.”

The hype is fading, most AI pilots don’t show real ROI, and many “AI-first” initiatives (like aggressive automation at Klarna) are now being quietly recalibrated, not celebrated.

Linas Beliūnas, a fintech expert, writer, and former country manager at Revolut, outlined three “brutal truths” about LLMs at scale:

- they’re inconsistent and hallucinate,

- they don’t know your business unless you design for that,

- and at enterprise scale they’re often slower and more expensive than expected.

The companies succeeding now aren’t trying to replace humans. And they’re not ignoring AI either. They are building disciplined hybrid systems, where domain expertise, AI literacy, and business judgment work together.

Linas says that localization teams should stop being defensive and building rogue experiments. Instead, they should:

- centralize AI experimentation,

- design AI + human workflows,

- and build an AI roadmap tied to business outcomes, not to buzzwords.

The real fortunes, he argued, will be made now—in this unglamorous phase where disciplined teams turn AI from a cost-cutting tool into a growth engine and competitive moat.

Google Session: The Latest in Agentic AI Research

Ashish Chauhan, Google — moderated by Olga Beregovaya

Ashish Chauhan, AI Customer Engineer at Google Cloud, started with the idea that an AI agent is like a junior employee who can finally follow instructions.

Ashish says that he sees a shift: after the era of model training and RAG, we’re entering the decade of agents — systems that both generate answers and take actions.

He explained that today:

- models are strong, but not autonomous;

- agentic tools exist, but they break;

- workflows work best when humans remain in control.

The main change is that Google Gemini now supports full agentic pipelines that connect the model, the knowledge base, the orchestration layer, and real-world integrations.

Why does this matter for localization?

A lot of localization tasks are repetitive and rule-based, like what you’d give to a junior assistant: creating jobs, uploading source files, checking completeness, running basic QA, and passing unusual cases to a senior specialist.

Agentic AI can do that assistant work for you. And this is exactly why localization is well positioned for implementing agentic AI. The processes are already structured, predictable, and mature. Adding agentic AI will simply remove friction and give teams more time.
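
To make the "junior assistant" tasks concrete, here is a minimal sketch of the routine checks described above: completeness, basic QA, and escalation of unusual cases. It is not an agent and not anything shown in the session; it only illustrates the kind of deterministic check an agent could call as a tool. The `LocalizationJob` class, its fields, and the return labels are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class LocalizationJob:
    """Hypothetical job record; field names are illustrative only."""
    job_id: str
    source_segments: list[str]
    translated_segments: list[str]
    issues: list[str] = field(default_factory=list)


def triage(job: LocalizationJob) -> str:
    """Run routine checks and decide who handles the job next."""
    # Completeness: every source segment needs a translation.
    if len(job.translated_segments) != len(job.source_segments):
        job.issues.append("segment count mismatch")
    # Basic QA: flag empty targets and targets identical to the source.
    pairs = zip(job.source_segments, job.translated_segments)
    for index, (source, target) in enumerate(pairs):
        if not target.strip():
            job.issues.append(f"segment {index}: empty translation")
        elif target.strip() == source.strip():
            # May be a false positive for brand names, so a human decides.
            job.issues.append(f"segment {index}: left untranslated")
    # Unusual cases go to a senior specialist; clean jobs proceed.
    return "escalate_to_senior" if job.issues else "proceed"


job = LocalizationJob("demo-1", ["Hello", "Save"], ["Bonjour", "Save"])
decision = triage(job)
print(decision, job.issues)
```

The escalation step keeps the human in control: the routine never fixes anything itself, it only decides whether a person needs to look.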

Slop to Pop: How to Humanize AI Language

Marina Pantcheva leads Linguistic AI Services at RWS and spends her days analyzing how GenAI writes. She also clearly understands how humans can make that writing sound real. Marina promised: after this talk, you’ll never look at AI-generated text the same way again.

How can you tell that a text was written by AI?

It often looks technically correct but is conceptually wrong.

For example, you may ask it to describe a tiger lily and get a text with striped petals, striped leaves, tiger tails, and even claws.

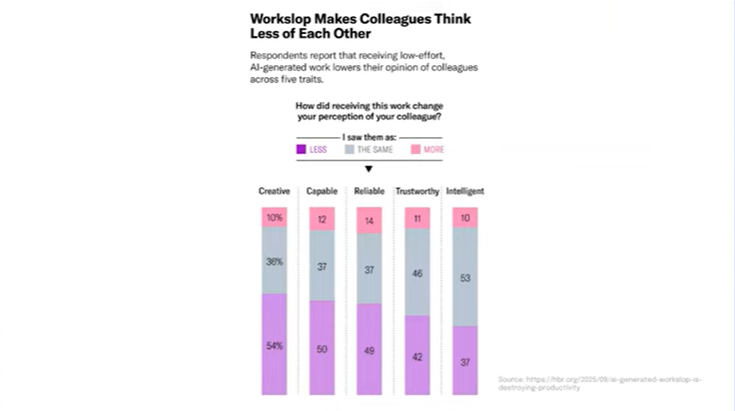

Why does it matter to spot AI slop?

People can recognize AI-generated texts, and they don’t like them. Marina cited Stanford research showing that when readers sense AI-generated language, they judge the writer as less creative, less capable, and even less intelligent. So, if your text “smells like AI,” people quietly downgrade you.

Marina divided the AI signs into three big categories:

- Words: buzzwords like elevate, leverage, resonate, seamless, robust.

- Structure: predictable rhythms, one-word hooks (“The outcome?”), rules of three, “It’s not X, it’s Y.”

- Meaning: fake “from…to…” ranges and metaphors that sound deep but say nothing.

Marina’s main advice is:

- Don’t use AI as the writer. Use it as a writing assistant to brainstorm, collect ideas, and draft.

- Decide exactly what you want to say before you prompt, then ask AI to help express it.

- Tell AI to avoid buzzwords, clichés, over-dramatic metaphors, rule-of-three, and “It’s not X, it’s Y” structures.

- Give AI your style to imitate. AI is built as a great imitator, so add a piece of your work as an example so it can figure out the brand tone, style rules, and key terminology.

- Always human-edit and fact-check. Remove AI “tells,” fix style and rhythm, and check facts (no “tiger tails” on tiger lilies).
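
Some of the surface-level tells listed above can be flagged automatically before the human edit. The sketch below is an assumption-laden illustration, not Marina's method: the buzzword list comes from the summary above, while the regular expression and the three-word limit for "hooks" are hypothetical heuristics that will miss many variants and produce false positives.

```python
import re

# Buzzwords listed in the summary above.
BUZZWORDS = ("elevate", "leverage", "resonate", "seamless", "robust")

# Hypothetical pattern for the "It's not X, it's Y" structure.
NOT_X_ITS_Y = re.compile(r"\bit[’']s not\b[^.?!]*,\s*it[’']s\b", re.IGNORECASE)


def find_ai_tells(text: str) -> dict[str, list[str]]:
    """Return rough matches for three kinds of AI tells."""
    findings: dict[str, list[str]] = {
        "buzzwords": [], "not_x_its_y": [], "short_hooks": []
    }
    lowered = text.lower()
    for word in BUZZWORDS:
        # \w* also catches simple inflections such as "leveraging".
        if re.search(rf"\b{word}\w*\b", lowered):
            findings["buzzwords"].append(word)
    findings["not_x_its_y"] = [m.group(0) for m in NOT_X_ITS_Y.finditer(text)]
    # Split into rough sentences, keeping the closing punctuation.
    for sentence in re.findall(r"[^.!?]+[.!?]", text):
        sentence = sentence.strip()
        # Very short questions such as "The outcome?" count as hooks.
        if sentence.endswith("?") and len(sentence.split()) <= 3:
            findings["short_hooks"].append(sentence)
    return findings


sample = "We leverage robust tools. The outcome? It's not a tool, it's a partner."
print(find_ai_tells(sample))
```

A check like this only finds word and structure tells. Meaning-level problems, such as the tiger tails on a tiger lily, still need a human reader.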

Quality Estimation Benchmark Results

Konstantin Dranch’s presentation focused on one question: can we actually trust today’s QE tools?

First: what is QE?

QE (Quality Estimation) is an AI system that tries to predict the quality of a translation without a human reference translation to compare against.

It can label a segment as:

- good enough to publish,

- needs light editing, or

- needs full review.

When QE works, it helps teams save time and money by routing content automatically.

But it also comes with risks. If you ask one model to judge another, errors can slip through. This is why QE has existed for years but still isn’t widely operationalized. Some systems fail on rare languages, creative content, or complex domains.

The benchmark

To understand how reliable these systems really are, Custom.MT ran a large community benchmark:

- 8,000 sentences from 16 organizations

- 6 QE systems tested (OpenAI, Claude, and industry QE models)

- Human judgments compared against machine scores

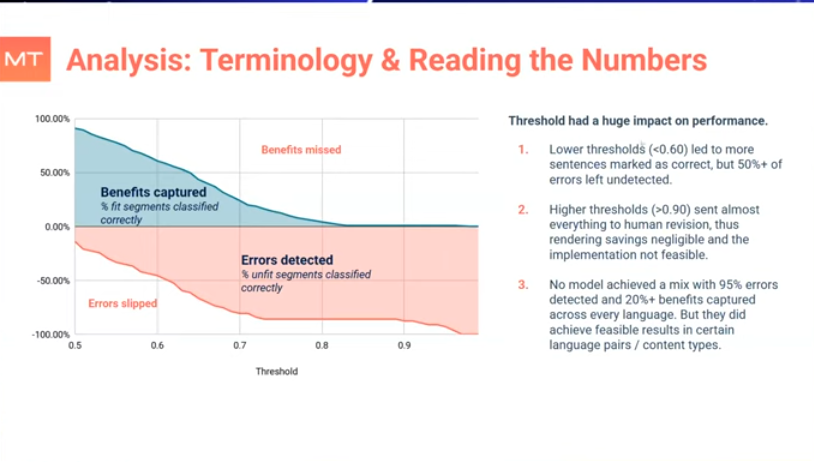

The results showed that thresholds matter more than people think.

- Set the bar too low, and errors go live.

- Set it too high, and you lose all efficiency gains.

Optimizing the threshold is what unlocks real value.
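
The trade-off can be seen on a handful of segments. The sketch below uses invented toy data, not the benchmark data, and assumes QE scores are normalized to the range 0 to 1 and that segments at or above the threshold are published without review. The function name `evaluate_threshold` is hypothetical.

```python
# Hypothetical toy data: (QE score, did a human judge the segment acceptable?)
SEGMENTS = [
    (0.95, True), (0.91, True), (0.88, True), (0.84, False),
    (0.79, True), (0.72, False), (0.65, True), (0.40, False),
]


def evaluate_threshold(segments, threshold):
    """Return (share auto-published, bad segments that would go live)."""
    auto_published = [ok for score, ok in segments if score >= threshold]
    errors_live = sum(1 for ok in auto_published if not ok)
    return len(auto_published) / len(segments), errors_live


for threshold in (0.5, 0.85, 0.99):
    share, errors = evaluate_threshold(SEGMENTS, threshold)
    print(f"threshold={threshold:.2f}  auto-published={share:.1%}  errors live={errors}")
```

On this toy data, a threshold of 0.5 auto-publishes 7 of 8 segments but lets 2 bad ones go live, 0.85 auto-publishes 3 segments with no bad ones, and 0.99 auto-publishes nothing. Real thresholds need to be calibrated against human judgments on your own content.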

Some systems caught errors earlier and delivered more savings; others only worked well in narrow conditions. Claude performed strongly but comes with no enterprise support, whereas industry QE tools were more stable but slightly less powerful.

The conclusion every localization specialist needs to know:

Linguists are still better than machines at judging translation quality, so QE should not replace humans.

Konstantin’s recommendations:

- Use QE to support automation, not replace linguists.

- Always perform periodic spot-checking of QE decisions.

- Combine QE with metadata (content type, locale, customer tier) to make smarter routing.
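
The last recommendation can be sketched as a routing function that picks a threshold from metadata and returns one of the three labels described earlier. Everything specific here is an assumption for illustration: the `Segment` fields, the threshold values, the enterprise adjustment, and the light-edit margin are hypothetical and were not presented in the talk.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    qe_score: float      # assumed to be normalized to the range 0..1
    content_type: str    # e.g. "legal", "marketing", "support"
    customer_tier: str   # e.g. "enterprise", "standard"


# Hypothetical publish thresholds per content type; calibrate on real data.
PUBLISH_THRESHOLDS = {"legal": 0.98, "marketing": 0.92, "support": 0.85}
DEFAULT_PUBLISH_THRESHOLD = 0.95
LIGHT_EDIT_MARGIN = 0.15  # scores this far below the bar get light editing


def route(segment: Segment) -> str:
    """Map a segment to 'publish', 'light_edit', or 'full_review'."""
    threshold = PUBLISH_THRESHOLDS.get(
        segment.content_type, DEFAULT_PUBLISH_THRESHOLD
    )
    # Illustrative policy: a stricter bar for top-tier customers.
    if segment.customer_tier == "enterprise":
        threshold = min(1.0, threshold + 0.03)
    if segment.qe_score >= threshold:
        return "publish"
    if segment.qe_score >= threshold - LIGHT_EDIT_MARGIN:
        return "light_edit"
    return "full_review"


print(route(Segment(0.90, "support", "standard")))  # publish
print(route(Segment(0.90, "legal", "standard")))    # light_edit
```

The same score leads to different decisions depending on content type, which is the point of combining QE with metadata. Locale could be added as another key in the same way.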

Building Multilingual LLMs: How to Train Your Model

How are multilingual LLMs actually trained? And why should companies think twice before building their own? These are the questions Kelly Marchisio raised in her presentation, and they are familiar to many companies.

A lot of teams believe that they need the “largest” or “smartest” model to get high-quality output. They blame hallucinations on model weakness.

But a bigger model is not always better.

Models perform better when they are provided with the right context.

Many enterprise LLM problems come from missing domain knowledge.

Models can perform extremely well if they understand terminology, product details, and examples from the company.

Long, complicated prompts often make results worse, not better.

“Training your own model sounds exciting. Maintaining it is the hard part.”

Kelly shared two success stories that show what good context can do:

- A retail company reduced hallucinations by simply adding real product descriptions to the prompt.

- A support team doubled accuracy by giving the model three examples of approved answers.

Kelly offered the following solutions:

- Invest in context, not just tools.

- Create internal LLM guidelines the same way you create style guides.

- Use short prompts with clear steps.
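
As an illustration of "context, not just tools," the sketch below assembles a short prompt from real product information and a few approved examples. It makes no model call, and the function name `build_prompt`, the section labels, and the sample product are hypothetical, not Kelly's or Cohere's format.

```python
def build_prompt(
    task: str,
    product_context: str,
    approved_examples: list[tuple[str, str]],
) -> str:
    """Assemble a short prompt: context first, then examples, then the task."""
    lines = ["Context:", product_context.strip(), ""]
    if approved_examples:
        lines.append("Approved examples:")
        for question, answer in approved_examples:
            lines.append(f"Q: {question.strip()}")
            lines.append(f"A: {answer.strip()}")
        lines.append("")
    lines += ["Task:", task.strip()]
    return "\n".join(lines)


prompt = build_prompt(
    task="Answer the customer in one sentence.",
    product_context="Model X kettle, 1.7 L, hand wash only.",  # invented product
    approved_examples=[("Is it dishwasher safe?", "No, hand wash only.")],
)
print(prompt)
```

Keeping the template this small makes it easy to review alongside a style guide, and the examples can be swapped per locale or product line.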

Panel: Rogue AI in the Localization Wild — Spot It, Fix It, Own It

Jennifer Vela Valido (Spotify), Marta Nieto (Preply), and Conchita Laguardia (Farfetch), moderated by María Jesús de Arriba Díaz

Hosted by WeShape AI (Women in Localization)

All three panelists work at the crossroads of localization and AI inside enterprise companies, and they know firsthand what happens when AI projects are built in silos. Jennifer, Marta, and Conchita discussed how quickly things break once a model that “works in English” is pushed to every market without involving localization.

The speakers shared real moments when internal AI projects went wrong:

- Case 1. A product team built an AI feature that worked fine in English but never tested it in other languages, and localization only discovered it after users abroad reported broken or offensive content.

- Case 2. Leadership told teams to “use AI” without defining goals or guardrails. Teams rushed into pilots, expected magic, and ended up with tools that sounded impressive but didn’t actually solve real problems.

- Case 3. Excited engineers rolled out a translation pipeline that looked great in English. But because no one checked other locales, it produced inconsistent terminology, mixed regional variants, and confusing UX. All of these problems landed on the localization team’s desk after launch.

The pattern Jennifer, Marta, and Conchita see:

Rogue AI usually starts the same way: AI projects are built in silos, usually by product or engineering, tested only in English, then pushed to all markets without involving localization.

Red flags:

- “We just used one prompt for all languages.”

- “We know it works in English, so it’ll work everywhere.”

- Applying AI where a simple rule or script would be safer.

Their recommendations:

- Bring localization in early, instead of just asking for a final “approval.”

- Back your arguments with data: failures, quality problems, and risks in other languages.

- Frame localization as multilingual AI expertise, not as “just translations.”

- Create cross-functional squads — linguists, engineers, data, and safety — that collaborate from day one.

“Localization has the experience to challenge hype and redirect AI projects before they cause damage.”

The panel also highlighted the important role women play in building bridges between technical teams, leadership, and users. Their focus on communication and responsible design keeps AI grounded in reality.

AWS Fireside Chat: What Agentic AI Looks Like Inside Enterprises

Tanay Chowdhury, AWS GenAI — moderated by Olga Beregovaya

What comes to mind when you hear about agentic AI in an enterprise company? An AI-powered robot making decisions instead of human beings?

Tanay Chowdhury, AWS GenAI lead, explained how this works in real companies. In practice, agentic AI takes the form of fully automated production workflows. Some examples:

- Recommendation engines update the moment you refresh a page. If you watch two cooking videos, the system immediately tries new suggestions and sees whether you click.

- Push notifications learn when you usually open your phone. If you ignore messages at 4 p.m. but open them at 7 p.m., the system shifts on its own.

- Shopping patterns differ by country. In some regions, long page views mean interest; in others, they signal hesitation. The AI has to learn these differences, not assume everything works like the U.S.

Tanay says none of this works without good data. “Agentic” behavior only appears when the system has enough signals — clicks, purchases, time spent on a page — to understand what users actually do.

What does this mean for localization specialists?

User behavior is not universal.

If AI assumes everyone behaves like U.S. users, recommendations, search, and notifications will fail in other markets.

This gives localization teams a new role: teaching AI how people behave in different regions.

Closing Keynote: Translation, Transformers, and Fiction

Eric Silberstein closed the day with a story that began in 1997, when he helped Microsoft build the first Chinese proofing tools. Back then, he learned how messy languages really are and how important human judgment is.

He says that localization experts already know how to work with content in many forms, which puts them in a strong position in this new era. We already work across languages, systems, and cultures. We know how to evaluate texts even when we can’t speak the language ourselves.

Eric ended by talking about his second career as a novelist. Fiction, he said, works a lot like modern language models: you follow a thread, build on every previous line, and see where the story goes.

It was a light and inspiring end to a day full of technical insights and practical lessons.

Final Thoughts

The conference confirmed that localization is not stepping aside for GenAI. Instead, it is becoming a central part of AI strategy.

GenAI was expected to simplify workflows. But in practice, it’s creating a new layer of responsibility for localization specialists.

It’s an interesting twist: the more powerful GenAI becomes, the more essential the people who guide it become too. And for now, this balance between automation and human review is what actually makes progress possible.

Frequently Asked Questions

The short answer is no. While AI handles a massive volume of work (Smartling reported that 84% of its translated words are now produced by AI), human expertise is more vital than ever. Leaders should view these tools as an “amplifier” for existing staff rather than a replacement. Humans are needed to act as the “intelligence” that decides which workflows are safe for machines and which require deep cultural judgment.

“Rogue AI” occurs when engineering or product teams build tools in a silo, testing only in English before pushing them to every market. To fix this, enterprises must centralize their experimentation and create “multilingual AI squads.” By bringing localization experts in early, you can catch broken assumptions or offensive content before it reaches your customers.

This is where “Agentic AI” comes in. Think of it as a digital junior employee that can follow instructions and take action rather than just writing text. In an enterprise AI setup, these agents can handle chores like creating jobs, uploading source files, checking for completeness, and running basic quality checks. This frees up your senior team to focus on strategy and high-stakes content.

Hallucinations are inevitable; if you translate 10 million words, even a 1% error rate results in 100,000 wrong words. To mitigate this risk, you must move away from “magic” and toward a manufacturing mindset. This involves building automated quality gates, using specific prompt templates, and ensuring “hallucination detection” is embedded into the production process.

This is known as “AI slop”: content that is technically correct but conceptually weird. Machines often use predictable rhythms and over-rely on buzzwords like navigate, delve, or profound. Research shows that when customers “smell” AI, they view the brand as less intelligent and less creative. To fix this, use the AI as a writing assistant to brainstorm ideas, but always have a human do the final edit to ensure it sounds real.

Not necessarily. A bigger model isn’t always a better model. Often, the problem isn’t the machine’s “brain” but a lack of context. LLMs perform much better when they are given specific domain knowledge, product details, and examples of your brand’s past work. Investing in better data and clear internal guidelines is often more effective than simply paying for a larger tool.

Quality Estimation (QE or MTQE) tools can predict if a translation is “fit for publication,” which helps save time and money. However, these systems aren’t perfect and can fail on rare languages or creative text. The best approach is to use QE to support automation but keep human linguists in the loop for periodic spot-checks and to set the “quality thresholds”. You can explore threshold optimization here: https://custom.mt/join-qe-benchmarking/

By using AI to make translation faster and less expensive, you can suddenly afford to localize content that was previously out of reach, like technical docs or training materials. This allows the business to sell into more countries and improve customer satisfaction. The goal is to move from a conversation about “spending” to a conversation about “enabling growth” in new markets.

The best way to keep your finger on the pulse of this fast-moving industry is to sign up for our newsletter. By subscribing, you will receive the latest industry news, updates on future events, and the most recent research on LLMs and translation tech. You can also explore our past and future webinars here: https://custom.mt/events-2/