Close to 200 people attended the Custom.MT workshop on AI prompt engineering in localization on April 18-19. The event spanned 7 hours and covered a wide array of material. Participants created 535 prompts and executed generation tasks a total of 38,947 times. The models used in the exercises included OpenAI GPT-4 Turbo, Anthropic Claude 3, and Mistral 7B, among others.

In 2024, one year into the GPT boom, language teams are working to implement generative AI more effectively. Prompts are becoming increasingly elaborate, with engineers and project managers employing various techniques to reduce hallucinations, enhance accuracy, and lower costs.

Here is a quick recap of the key points.

1. Live examples of GenAI industrialization by language teams

More than 40% of the participants indicated that generative AI helps their organizations’ localization workflows. Five presenters provided an overview of their work.

– Terminology extraction for translation (Marta Castello, Creative Words)

– Generating product descriptions in multiple languages for eCommerce (Lionel Rowe, Clearly Local)

– Translation + RAG glossaries (Silvio Picinini, eBay)

– Copy generation and GenAI hub in a gaming company (Bartlomiej Piatkiewicz, Ten Square Games)

– Video voiceover and summarization of descriptions in eLearning (Mirko Plitt, WHO Academy)

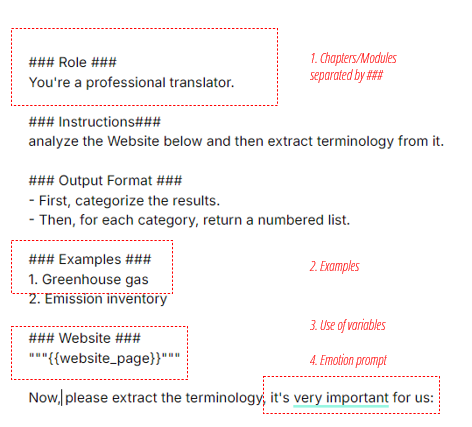

2. Recommended prompt structure

The first practical task involved building a terminology extraction prompt using best practices:

– Separating prompts into ### Sections ### to make them modular

– Using variables so that the prompt can be reused with new content

– Minimizing the instructions

– Using examples

– Adding emotional markers, which may improve the output

– Setting the temperature to 0 for more reproducible results
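The practices above can be sketched as a reusable template. This is a minimal illustration, not the prompt used in the workshop: the section names, the wording, the example sentence, and the `build_prompt` helper are all assumptions made for the sketch.

```python
from string import Template

# Hypothetical template: section names and wording are illustrative only.
TERM_EXTRACTION_PROMPT = Template("""\
### Role ###
You are a terminology specialist working on $domain content.

### Task ###
Extract up to $max_terms key terms from the text below.
This is very important for our product release.

### Example ###
Text: Press the power button to reboot the router.
Terms: power button; router

### Text ###
$source_text

### Output format ###
One line, terms separated by semicolons.
""")

# Settings to send along with the prompt; temperature 0 aims for repeatable output.
GENERATION_SETTINGS = {"temperature": 0}


def build_prompt(source_text: str, domain: str = "software", max_terms: int = 10) -> str:
    """Fill the template variables so the same prompt can be reused with new content."""
    return TERM_EXTRACTION_PROMPT.substitute(
        source_text=source_text, domain=domain, max_terms=max_terms
    )


if __name__ == "__main__":
    print(build_prompt("Open the settings menu and enable dark mode."))
```

Keeping each section under its own `###` header makes it easy to swap one part, such as the example, without touching the rest.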

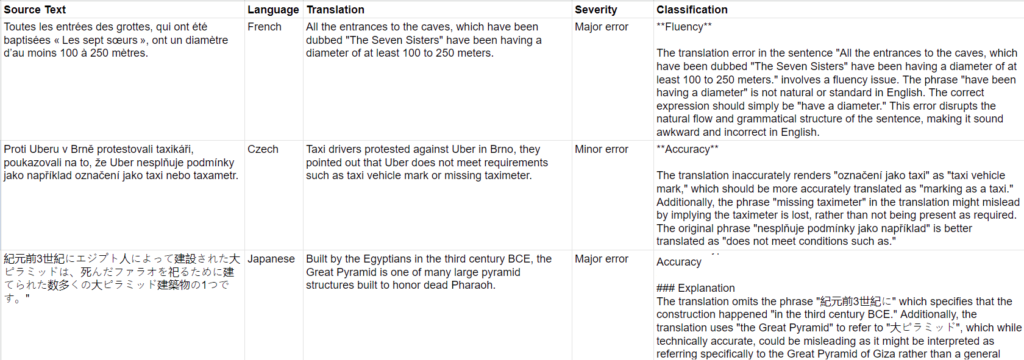

3. Spreadsheet integration for LQA

The second task was to integrate prompts with spreadsheets to unlock working with variables across multiple lines of content. Participants created their own language quality assurance bots by asking LLMs to detect and label translation errors. We used the public DEMETR dataset from the WMT competitions as task material.
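Outside a spreadsheet tool, the same idea can be sketched in a few lines: one prompt template, filled once per row. The column names (`source`, `translation`), the label set, and the `fake_model` stand-in are assumptions for illustration; a real setup would replace `fake_model` with a call to an LLM provider.

```python
import csv
import io
from typing import Callable

# Hypothetical LQA prompt; the label set is an assumption for this sketch.
LQA_PROMPT = (
    "### Task ###\n"
    "Compare the translation with the source and label the error.\n"
    "Answer with one label: none, minor, major, critical.\n\n"
    "### Source ###\n{source}\n\n"
    "### Translation ###\n{translation}\n"
)


def label_rows(csv_text: str, call_model: Callable[[str], str]) -> list[dict]:
    """Fill the prompt once per spreadsheet row and store the model's label."""
    reader = csv.DictReader(io.StringIO(csv_text))
    labelled = []
    for row in reader:
        prompt = LQA_PROMPT.format(source=row["source"], translation=row["translation"])
        labelled.append({**row, "label": call_model(prompt).strip()})
    return labelled


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call so the sketch runs offline."""
    return "major" if "Katze" in prompt else "none"


SAMPLE = (
    "source,translation\n"
    "The dog sleeps.,Die Katze schläft.\n"
    "Good morning.,Guten Morgen.\n"
)

if __name__ == "__main__":
    for row in label_rows(SAMPLE, fake_model):
        print(row["source"], "->", row["label"])
```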

4. Chain of Thought prompts

Giving an AI model too many instructions at once, for example a 20-line localization style guide, leads to many of them being skipped. Instead, in this exercise the participants either split the prompt into a chain of smaller prompts or instructed the model to work through the task step by step.

In our spreadsheet exercise, the 1st prompt evaluated the severity of the error, and the 2nd prompt classified it by type based on the results of the previous generation.
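A two-step chain of this kind might look like the sketch below. The prompt wording, the label sets, and `fake_model` are hypothetical; skipping the second call when no error is found is a design choice of this sketch, not something described in the workshop.

```python
from typing import Callable

# Hypothetical prompts for the two steps of the chain.
SEVERITY_PROMPT = (
    "Rate the severity of the translation error: none, minor, major, critical.\n"
    "Source: {source}\nTranslation: {translation}\n"
)
TYPE_PROMPT = (
    "The translation below contains an error of {severity} severity.\n"
    "Classify it as one of: mistranslation, omission, grammar, terminology.\n"
    "Source: {source}\nTranslation: {translation}\n"
)


def run_chain(source: str, translation: str, call_model: Callable[[str], str]) -> dict:
    """Run step 1, then feed its result into the step 2 prompt."""
    severity = call_model(
        SEVERITY_PROMPT.format(source=source, translation=translation)
    ).strip().lower()
    if severity == "none":
        # Assumption: no second call is needed when step 1 finds no error.
        return {"severity": severity, "type": "none"}
    error_type = call_model(
        TYPE_PROMPT.format(severity=severity, source=source, translation=translation)
    ).strip().lower()
    return {"severity": severity, "type": error_type}


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call so the sketch runs offline."""
    if prompt.startswith("Rate the severity"):
        return "Major\n"
    return "Mistranslation"


if __name__ == "__main__":
    print(run_chain("The dog sleeps.", "Die Katze schläft.", fake_model))
```

Each prompt stays short, and the second one only sees the single fact it needs from the first.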

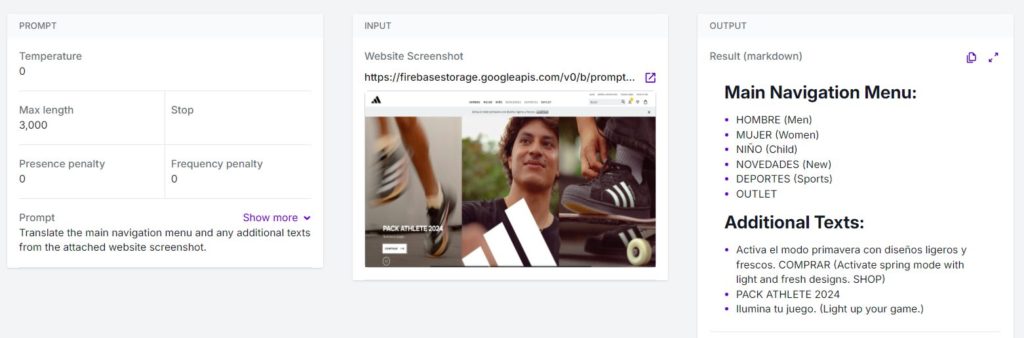

5. Vision in multimodal models

In this task, participants worked with the vision-capable GPT-4 Turbo model to complete assignments with images:

– image translation

– getting text from scanned PDFs

– screenshot localization testing

– generating multilingual product descriptions from an image

– identifying fonts, and more

Example: a participant translates the menu of a sample website from an image screenshot.

6. Retrieval-augmented generation (RAG)

With retrieval, a large language model can generate output based on facts from a linked database. Such databases can hold company data, glossaries, style guides, and translation memories, so the answer draws on the facts they contain instead of relying on the LLM’s general memory.

In the exercise, we used retrieval for the following use cases:

– translate with glossaries

– check existing translation for terminology compliance

– create a “chat-with-your-website” bot

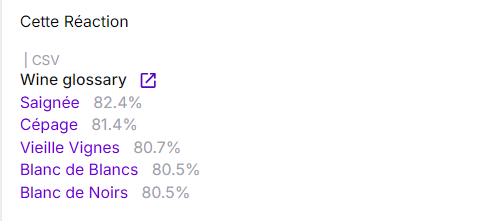

Example: for retrieval, the database splits the text into chunks and searches for the relevant chunks by semantic similarity, shown as a percentage in the screenshot above.
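The chunk-and-search step can be illustrated with a toy retriever. Note the substitution: production systems typically score chunks with embedding vectors, whereas this sketch uses plain word-count cosine similarity so that it runs without any external service. The function names and the chunk size are assumptions.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, max_words: int = 40) -> list[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def vectorize(text: str) -> Counter:
    """Toy stand-in for an embedding: lowercase word counts."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[tuple[float, str]]:
    """Return the top_k chunks with their similarity to the query, best first."""
    query_vec = vectorize(query)
    scored = [(cosine(query_vec, vectorize(chunk)), chunk) for chunk in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    glossary_chunks = [
        "The term checkout must be translated as Kasse.",
        "Our office is closed on public holidays.",
        "Use formal address in German.",
    ]
    for score, chunk in retrieve("How do I translate checkout?", glossary_chunks, top_k=1):
        print(f"{score:.0%}  {chunk}")
```

The retrieved chunks are then pasted into the prompt, so the model translates or checks terminology against them rather than from memory.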

7. Agents

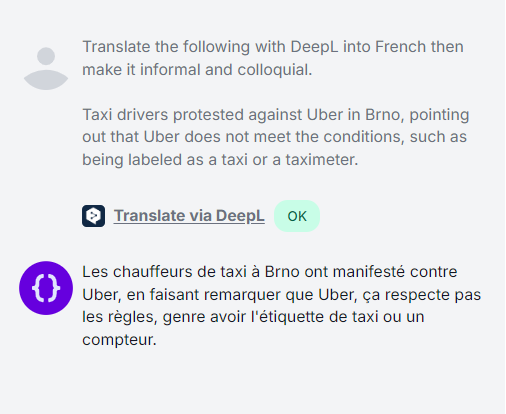

This module covered the ability of LLMs to operate external apps via their APIs. We explored translating with DeepL and proofreading with ChatGPT in a chat interface. While not immediately applicable to workflows, the overview of agents showcases a potential future scenario where LLMs act as a user interface to other applications.

Example: GPT-4 Turbo calls DeepL to translate a paragraph of text.

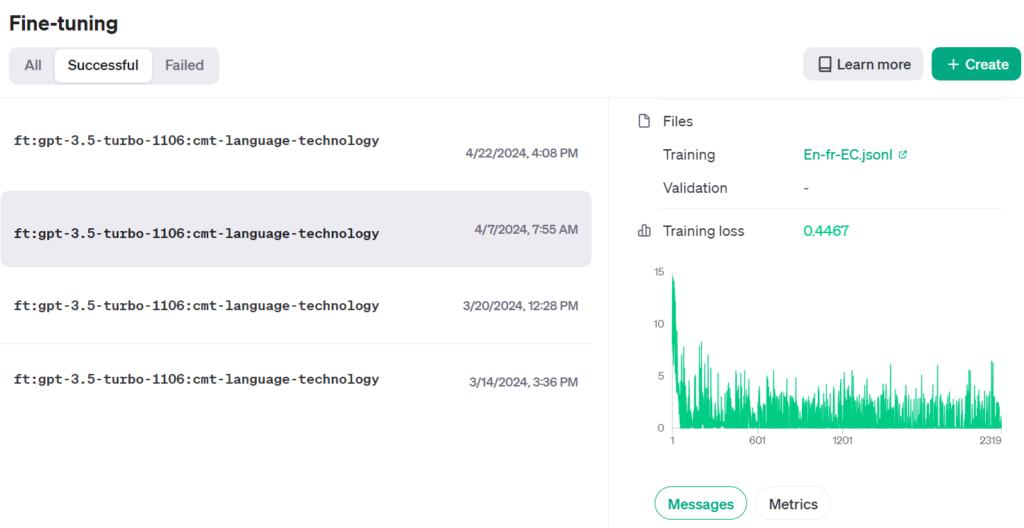
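One common way to wire this up is for the model to emit a structured tool call that the host application then executes. The sketch below shows only that dispatch step, under stated assumptions: the JSON shape, the tool name `translate`, and `translate_stub` are hypothetical, and no real DeepL or OpenAI API is called.

```python
import json
from typing import Callable


def translate_stub(text: str, target_lang: str) -> str:
    """Placeholder for an external MT call; a real agent would call the MT provider here."""
    return f"[{target_lang}] {text}"


# Registry of tools the model is allowed to request (names are hypothetical).
TOOLS: dict[str, Callable[..., str]] = {"translate": translate_stub}


def execute_tool_call(tool_call_json: str) -> str:
    """Parse a tool call emitted by the model and run the matching function."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**call["arguments"])


if __name__ == "__main__":
    # Assumed shape of what the model might return when it decides to use a tool.
    model_output = '{"name": "translate", "arguments": {"text": "Hello", "target_lang": "DE"}}'
    print(execute_tool_call(model_output))
```

The tool result is normally sent back to the model, which then writes the final answer for the user.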

8. Fine-tuning GPT-3.5 Turbo

Fine-tuning GPT-3.5 Turbo on translation memories and glossaries can bring its output closer to that of GPT-4 while keeping the cost below that of conventional machine translation. This module covered how fine-tuning is done and how it affects the quality and cost of LLM-based localization.
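Preparing the training data is usually the first step. The sketch below converts translation memory segments into JSON Lines with one chat-style `messages` record per segment, the general shape used for chat model fine-tuning. The system prompt wording and the `tm_to_jsonl` helper are assumptions, and the exact format should be checked against the provider's current documentation.

```python
import json


def tm_to_jsonl(pairs: list[tuple[str, str]], source_lang: str, target_lang: str) -> str:
    """Turn (source, target) translation memory pairs into JSONL training records."""
    # Hypothetical system prompt; a real one would reflect the team's style guide.
    system = f"Translate from {source_lang} to {target_lang}. Follow the approved terminology."
    lines = []
    for source, target in pairs:
        record = {
            "messages": [
                {"role": "system", "content": system},
                {"role": "user", "content": source},
                {"role": "assistant", "content": target},
            ]
        }
        # ensure_ascii=False keeps accented characters readable in the file.
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)


if __name__ == "__main__":
    tm = [("Add to cart", "In den Warenkorb"), ("Checkout", "Kasse")]
    print(tm_to_jsonl(tm, "English", "German"))
```

Glossary entries can be added the same way, as short source and target pairs.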

Presenters: Dominic Wever (Promptitude) and Konstantin Dranch (Custom.MT).

The workshop recording is available at:

https://www.youtube.com/watch?v=MJNlhyStv14 – part 1.

https://www.youtube.com/watch?v=QPPRtquyvgQ – part 2.

Next workshop

The next installment is planned for Jun 18, 2024, before the TAUS Massively Multilingual Conference in Rome.

https://www.taus.net/events/massively-multilingual-conference-rome-2024
