A Guide for Localization Managers, AI Engineers, and Researchers

Machine translation (MT) is transforming how we communicate across languages, and at the heart of this revolution are high-quality machine translation datasets. Whether you’re working with computer-assisted translation (CAT) tools, building custom MT models, or managing multilingual content, the right dataset can streamline your work, improve translation accuracy, and support scalable AI translation services.

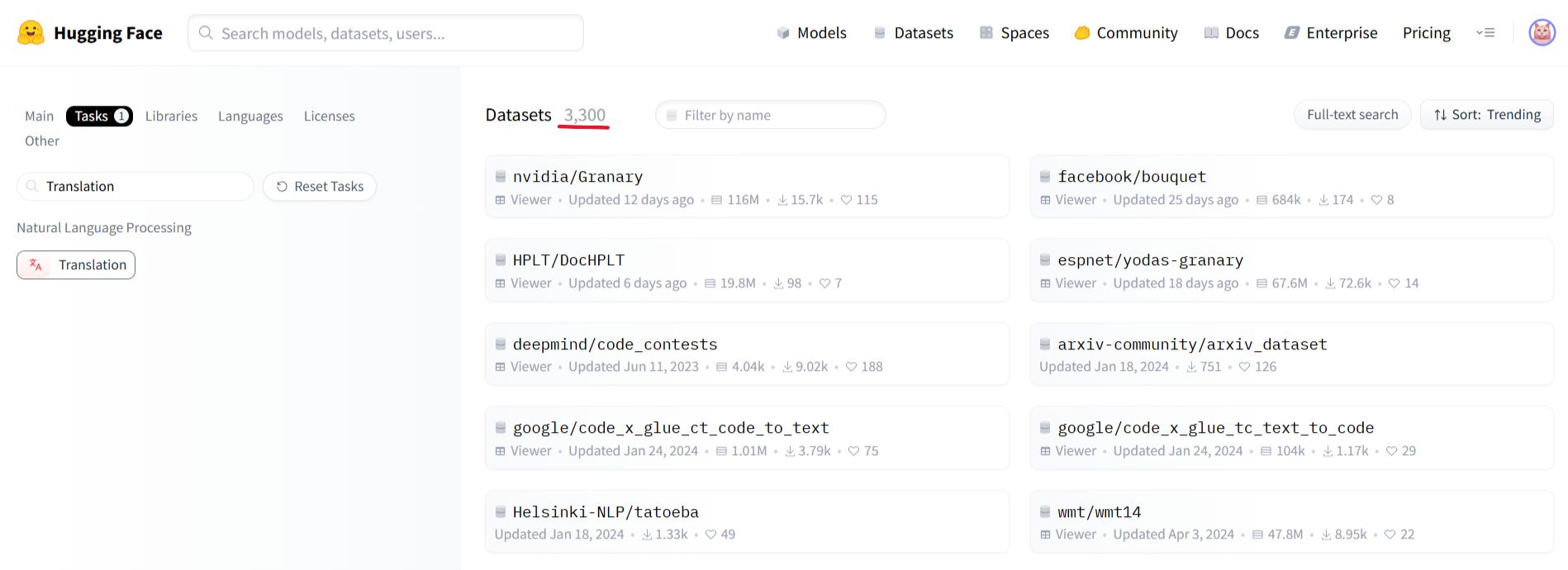

There’s no shortage of machine translation datasets today: Hugging Face, OPUS, the European Language Grid, GitHub, and Kaggle host thousands of resources, while professional providers like Translated and Unbabel offer curated options. But more data is still needed. Public resources are concentrated in a few high-resource languages, leaving low-resource languages underserved: imagine trying to train a legal MT model from Luxembourgish to Welsh with only a handful of parallel sentences.

Many corpora are also noisy, domain-mismatched, or poorly aligned, and opaque licensing (especially around commercial reuse) often adds another layer of complexity, making it hard to train reliable MT models without heavy cleaning or custom data collection. These issues make real-world MT training an art more than an off-the-shelf science.

This guide is for translators, localization managers, MT users, AI engineers, and researchers. We’ll look at the most notable machine translation datasets from our Top 100 list and highlight why they matter, how they compare, and how to pick the right ones for your work.

Bonus: Get the list of the top 100 open-source MT datasets and seven dataset providers.

From Classic NMT to Modern LLM Training

Previously, MT systems were trained from scratch on large parallel corpora with frameworks like Marian NMT or OpenNMT. Today, engineers often start with large language models like Mistral or DeepSeek and fine-tune them on smaller, high-quality multilingual datasets. This makes dataset quality more important than sheer volume.

Who Uses Machine Translation Datasets and Why It Matters

In practice, it’s usually engineers and researchers who download and work directly with machine translation datasets. They rely on these corpora to train, fine-tune, and evaluate models, deciding which data is clean, aligned, and suitable for a given project. Note that if you benchmark on public datasets, results can be skewed because major providers like Microsoft and DeepL likely included these datasets in their training data.

But there’s an overlooked opportunity for the rest of the localization industry. Most localization managers and translators aren’t even aware that millions of in-domain translation memory segments are freely available. While raw datasets are primarily useful for engineers, translators can also benefit by downloading a translation memory, editing it with tools like ChatGPT, and adapting it to a client’s preferred style or terminology. This approach is particularly valuable when entering a new domain or language, where ready-made resources can accelerate productivity and improve consistency from the start.
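For readers who want to inspect a downloaded translation memory before editing it, here is a minimal sketch in Python. It assumes the memory is in TMX format and that language codes match exactly (so "en" will not match "en-US"). The function name `read_tmx_pairs` and the sample content are hypothetical, used only for illustration.

```python
import xml.etree.ElementTree as ET

# ElementTree exposes the xml:lang attribute under the XML namespace URI.
XML_LANG = "{http://www.w3.org/XML/1998/namespace}lang"


def read_tmx_pairs(tmx_text, src_lang, tgt_lang):
    """Return (source, target) segment pairs from a TMX document string."""
    root = ET.fromstring(tmx_text)
    pairs = []
    for tu in root.iter("tu"):
        segments = {}
        for tuv in tu.iter("tuv"):
            # TMX 1.4 uses xml:lang; some older files use a plain "lang" attribute.
            lang = (tuv.get(XML_LANG) or tuv.get("lang") or "").lower()
            seg = tuv.find("seg")
            if seg is not None:
                # itertext() also collects text nested inside inline tags.
                segments[lang] = "".join(seg.itertext()).strip()
        src = segments.get(src_lang.lower())
        tgt = segments.get(tgt_lang.lower())
        # Skip translation units that lack either side.
        if src and tgt:
            pairs.append((src, tgt))
    return pairs


# Toy sample, not taken from any real translation memory.
SAMPLE_TMX = """<tmx version="1.4">
  <header srclang="en" datatype="plaintext" segtype="sentence"
          adminlang="en" creationtool="example" creationtoolversion="1.0" o-tmf="none"/>
  <body>
    <tu>
      <tuv xml:lang="en"><seg>Take one tablet daily.</seg></tuv>
      <tuv xml:lang="de"><seg>Nehmen Sie täglich eine Tablette ein.</seg></tuv>
    </tu>
    <tu>
      <tuv xml:lang="en"><seg>Store below 25 °C.</seg></tuv>
    </tu>
  </body>
</tmx>"""

pairs = read_tmx_pairs(SAMPLE_TMX, "en", "de")
print(pairs)
```

The second translation unit has no German segment, so only the first pair is returned. For a real file, the same function could be fed the file's contents read as text.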

What Makes a Good Machine Translation Dataset?

The ideal dataset depends on your use case, but in general, high-quality MT datasets share these traits:

- Alignment quality – Clean, sentence-aligned source/target text.

- Licensing clarity – Check commercial use permissions (CC-BY, CC0, etc.).

- Domain relevance – Specialized corpora (pharma, legal, automotive) reduce post-editing.

- Evaluation-ready – Reference translations or quality scores for benchmarking (BLEU, COMET, TER).

- Language coverage – Critical for low-resource languages.

The Heavyweights: Large-Scale Parallel Corpora

If you need volume and variety, these datasets are the backbone of many MT systems:

- Europarl – Official European Parliament proceedings in 21 languages. Clean, formal, and ideal for legal/political domains.

- ParaCrawl – Web-mined multilingual content in 42 languages. Great for broad-domain coverage, but requires filtering for quality.

- CCMatrix – Web-mined dataset with 90 languages, aligned using LASER embeddings. Great for scale but noisier than curated corpora.

- CCAligned – Document-aligned dataset from Common Crawl in 137 languages. Slightly cleaner than CCMatrix but still requires quality checks.

- WikiMatrix – Wikipedia sentence alignments in 96 languages, useful for encyclopedic and factual text.

- TAUS – Paid core collection of 7.4 billion words across 483 language pairs, including 26 low-resource languages, providing high-quality multilingual training data for MT, LLM, and AI development.

- OpenSubtitles – A large-scale but noisy collection of movie and TV subtitles in 94 languages, valued for its colloquial, conversational, and domain-diverse parallel text.

These stand out due to their sheer scale and multilingual diversity, making them an indispensable resource for training baseline models. They are best paired with cleaner, more specific domain data.

Benchmarking Champions: Evaluation and Low-Resource Heroes

These datasets aren’t just for training; they’re for measuring performance and targeting underrepresented languages:

- WMT Test Sets – Gold standard annual benchmark datasets used by researchers worldwide. News, biomedical, and low-resource tracks available.

- FLORES+ – 997 (dev) and 1,012 (devtest) sentences across 222 languages, ideal for low-resource MT evaluation.

- MLQE-PE – Multilingual quality estimation with human post-edit data, helping you measure real-world MT performance.

- NTREX-128 – News Test References for 128 languages, with document-level context.

- ACES – Annotated dataset with 68 error phenomena for evaluating MT metrics across diverse linguistic challenges, ideal for comprehensive quality estimation.

These are essential when you want standardized test sets that enable fair, apples-to-apples comparisons between MT systems.
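A comparison is only fair if the test sentences did not also appear in a system's training data. For data you control, one rough way to check this is to look for test sentences that already occur in your training set. The sketch below is a minimal Python example under simple assumptions: it only catches matches that are identical after lowercasing and punctuation removal, so near-duplicates and paraphrases will slip through. The function names and sample sentences are hypothetical.

```python
import re
import unicodedata


def normalize(sentence):
    """Apply Unicode NFKC, lowercase, drop punctuation, and collapse whitespace."""
    text = unicodedata.normalize("NFKC", sentence).lower()
    text = re.sub(r"[^\w\s]", " ", text)
    return " ".join(text.split())


def overlap_report(train_sources, test_sources):
    """Return test sentences whose normalized form also occurs in the training data."""
    seen = {normalize(s) for s in train_sources}
    leaked = [s for s in test_sources if normalize(s) in seen]
    rate = len(leaked) / len(test_sources) if test_sources else 0.0
    return leaked, rate


# Toy data for illustration only.
train = ["The committee approved the budget.", "Prices rose sharply in March."]
test = ["the committee approved the budget", "Voters head to the polls on Sunday."]

leaked, rate = overlap_report(train, test)
print(f"{len(leaked)} of {len(test)} test sentences also appear in training data ({rate:.0%})")
```

If the overlap rate is noticeably above zero, it is usually safer to remove the leaked sentences from the training data than from the test set, so that scores stay comparable with published results.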

Domain-Specific Powerhouses

If you translate in specialized fields, these datasets give your MT engine the terminology and tone it needs:

- EMEA – European Medicines Agency documents in 22 languages; gold for pharmaceutical and medical translation.

- ParaMed – Chinese-English medical corpus based on NEJM content.

- EuroPat – Patent corpora for technical and legal terminology in 6 languages.

- News Commentary – Political and economic commentary, ideal for journalistic and policy-related translations.

- Legal Ukrainian – Monolingual legal-domain corpus, great for domain adaptation.

These shine because they drastically cut down post-editing effort in highly specialized contexts.

Speech and Multimodal Translation Datasets

For MT that goes beyond text:

- MuST-C – 385 hours of TED Talk speech paired with translations into 8 languages.

- Europarl-ST – Parliamentary speech aligned with translations in 9 languages.

- CoVoST – Speech-to-text translation from 21 languages to English and back.

- How2 – Instructional videos with English-Portuguese translations.

- Libri-Trans – Audiobook-based English-French speech translation data.

These are key if your goal is end-to-end speech translation or multimodal MT, powering subtitling, dubbing, and accessibility features.

Cultural & Community-Driven Corpora

Crowdsourced or community-translated content often offers diversity and cultural nuance missing from institutional datasets:

- Tatoeba – Crowdsourced sentence translations in 397 languages, great for low-resource languages such as Shona and Kabyle.

- Global Voices – Community-translated news articles with rich cultural context in 30 languages.

- Bianet – Parallel Turkish–Kurdish–English news articles.

- Tanzil – Quran translations in 42 languages, used in religious and literary MT research.

These are valuable for their linguistic diversity and their ability to capture localization-ready language data.

Synthetic Data for Domain Adaptation

What do you do when the dataset you need simply doesn’t exist? Synthetic data generation is becoming an effective way to build domain-specific datasets when authentic parallel material is scarce. The process typically starts with a small set of in-domain content such as a technical manual or product instructions, which is translated into the target language and reviewed by a human linguist.

This high-quality seed data is then expanded with machine learning models to produce multiple variations, often scaled up by factors of ten or more. Human reviewers refine prompts, correct errors, and perform spot-checks to reduce noise and ensure consistency. Through iterative expansion and refinement, a small initial sample can be transformed into a large, reliable dataset that accurately captures the terminology and style of the target domain.

Before You Start: Cleaning and Measuring Quality

Even the best datasets often require preliminary work before they’re useful for training. Tasks like data cleaning, anonymization, sentence alignment, and human annotation are common. To make informed decisions, it’s critical to look at quality statistics and metrics that show how reliable a dataset really is.

One great example is the HPLT dataset, one of the largest open text corpora ever released. You can also explore quality metrics and statistics for HPLT and other datasets with our partner tool here: HPLT Analytics.
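To make the cleaning step more concrete, here is a minimal Python sketch of a rule-based filter for parallel sentence pairs. It is an illustration under simple assumptions, not a full pipeline: the thresholds (`max_ratio`, `min_chars`, `max_chars`) are hypothetical starting values, and a character-length ratio is a poor fit for pairs with very different scripts, such as Chinese and English.

```python
def clean_pairs(pairs, max_ratio=2.5, min_chars=3, max_chars=500):
    """Drop empty, untranslated, length-mismatched, and duplicate sentence pairs."""
    seen = set()
    kept = []
    for src, tgt in pairs:
        # Collapse runs of whitespace so trivial variants compare equal.
        src, tgt = " ".join(src.split()), " ".join(tgt.split())
        if not src or not tgt:
            continue  # one side is empty
        if not (min_chars <= len(src) <= max_chars and min_chars <= len(tgt) <= max_chars):
            continue  # too short or too long to be a useful sentence
        if src.lower() == tgt.lower():
            continue  # source copied to target, likely untranslated
        ratio = max(len(src), len(tgt)) / min(len(src), len(tgt))
        if ratio > max_ratio:
            continue  # lengths differ too much, likely misaligned
        key = (src.lower(), tgt.lower())
        if key in seen:
            continue  # duplicate pair
        seen.add(key)
        kept.append((src, tgt))
    return kept


# Toy data for illustration only.
raw = [
    ("Press the power button.", "Appuyez sur le bouton d'alimentation."),
    ("Press the power  button.", "Appuyez sur le bouton d'alimentation."),
    ("Warranty", "Warranty"),
    ("OK", ""),
    ("Yes.", "Veuillez consulter le manuel d'utilisation avant la première mise en service."),
]

cleaned = clean_pairs(raw)
print(f"kept {len(cleaned)} of {len(raw)} pairs")
```

Comparing the number of pairs kept against the number dropped by each rule gives a first, rough quality statistic for a corpus before any training run.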

Expert Insight from Lev Berezhnoy, Product Innovation Manager at Prompsit

Open datasets are a great start, but the richest data lies within your company. When a translation system learns from your support requests, work documents, and glossaries, it begins to speak in your brand’s voice. Data is critical today and often challenging. In one European project, we flew hard drives with datasets from Canada to avoid waiting months for petabytes of data to reach our servers via fiber-optic cables. Now, our expertise covers the entire process of creating custom datasets, and we complete all of them on time, quickly, and creatively.

Why Not Just Use the Biggest Dataset?

Bigger isn’t always better. Large-scale web-mined corpora (like Common Crawl derivatives) are great for coverage, but noisy alignments, inconsistent terminology, and mixed registers can hurt quality. The best MT pipelines mix:

- Massive general corpora for language coverage.

- Clean domain-specific corpora for terminology control.

- Benchmark datasets for evaluation.

Practical Tip: For legal translation, start with Europarl for general coverage, add EuroPat for patent-specific terminology, and use WMT test sets to evaluate your model. For low-resource languages, pair Tatoeba with FLORES+ to balance training and benchmarking.
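One way to combine a large general corpus with a smaller domain corpus is weighted sampling, so the domain data is not drowned out. The Python sketch below illustrates the idea with toy data. The weights are arbitrary assumptions for the example and not recommended ratios, and `mix_corpora` is a hypothetical helper name. Benchmark data is deliberately left out of the mix, since it should stay held out for evaluation.

```python
import random


def mix_corpora(corpora, weights, n_samples, seed=13):
    """Draw n_samples pairs, picking the corpus for each draw according to its weight."""
    rng = random.Random(seed)  # fixed seed keeps the mix reproducible
    names = list(corpora)
    picks = rng.choices(names, weights=[weights[name] for name in names], k=n_samples)
    # Sampling is with replacement, so a small corpus can be upsampled.
    return [(name, rng.choice(corpora[name])) for name in picks]


# Toy corpora for illustration only.
corpora = {
    "general": [("Hello.", "Bonjour."), ("Thank you.", "Merci.")],
    "legal": [("The lessee shall pay rent.", "Le preneur paie le loyer.")],
}
weights = {"general": 1.0, "legal": 3.0}

batch = mix_corpora(corpora, weights, n_samples=8)
for name, (src, tgt) in batch:
    print(name, src, tgt, sep=" | ")
```

Because sampling is with replacement, heavy upweighting of a very small corpus repeats the same sentences many times, which is worth monitoring for overfitting.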

The Takeaway

Machine translation datasets aren’t just backend resources; they’re strategic tools. Whether you’re improving CAT tool output, evaluating AI translation services, or building your own NMT pipeline, the right combination of datasets will:

- Improve translation quality.

- Reduce post-editing time.

- Lower localization costs.

- Help you make evidence-based MT decisions.

Our full Top 100 MT Datasets list includes everything from the ELRA Collection to Igbo-Text, curated for quality, domain diversity, and language coverage.

Ready to put your dataset to work?

Custom.MT specializes in training and adapting MT engines to your domain for maximum translation quality, even when ready-made datasets aren’t available. For example, highly specialized content like video game localization often requires custom data collection and adaptation.

Talk to our MT specialists → Book a free consultation

Take a look at our QE tool → Machine Translation Evaluation

Explore the Top 100 Datasets for Machine Translation Models — simply complete the form to receive the file by email.
