Independent MTQE Benchmark Findings Across 17 Language Pairs

QE Benchmark Summary

Machine Translation Quality Estimation (MTQE) works, but only when you treat it as a signal, not a switch. In our independent MTQE benchmark of nearly 8,000 real production sentences from 16 companies and 17 language pairs, we found that a well-tuned QE model can catch 87% of real translation errors while still letting 35% of clean segments skip human review. The catch? The right threshold differs by language, content type, and risk appetite, so there’s no one-size-fits-all setting. Yet when configured properly, MTQE becomes an effective AI quality gate that helps localization teams balance cost, speed, and translation quality.


As companies move large volumes of content through machine translation, the next strategic question becomes unavoidable: Can you let AI (not humans) decide which sentences are good enough to publish?

This is the promise of Quality Estimation (QE): a reliable, automated quality gate that decides whether a translated segment is Fit (publishable) or Unfit (requires post-editing). But can AI really make that call? Which QE model can you trust? And what impact can you expect in real production?

This report presents the largest independent evaluation of two general-purpose LLM-based QE judges (OpenAI GPT and Claude) and four specialized MT and localization QE solutions (TAUS, Widn.AI, Pangeanic, ModernMT) to date, built on live domain data – not synthetic examples or vendor demos.

MTQE: How AI Teams Up with Humans to Check Translations

Machine Translation Quality Estimation (QE), also known as Quality Prediction (QP) or MTQE/MTQP, is the task of automatically predicting the quality of a machine translation output without a human reference translation. If the predicted quality is high, the segment can skip human post-editing, saving the time and money every localization team wishes they had.

Despite more than a decade of academic research and increasingly capable models, QE remains surprisingly under-adopted in many localization workflows. Many teams remain cautious. According to recent industry reports and experts, QE is often seen not as a fully autonomous judge but as a risk-management tool: a way to reduce, not eliminate, human review, while keeping quality within acceptable limits.

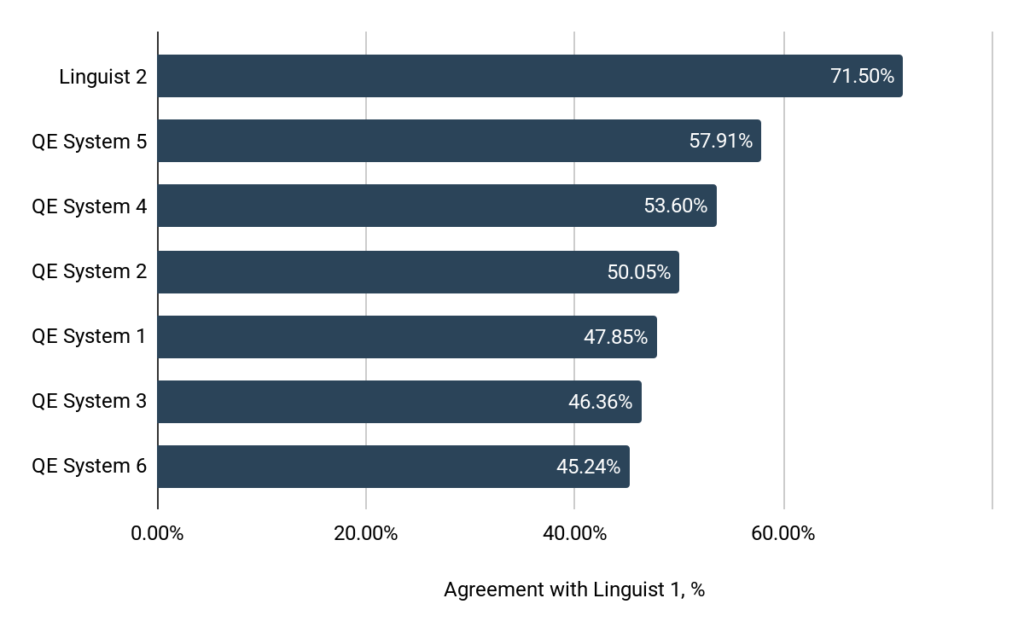

Human Linguists: Aligned Better Than Machines

Before evaluating AI QE systems, it’s important to understand how humans perform on the same task. In our analysis, two professional linguists agreed on translation quality 71.9% of the time, highlighting the inherent subjectivity in judging MT output. Even so, average agreement between human annotators was consistently higher than between any QE model and a human linguist.

This reinforces that, while AI can assist, human expertise remains the most reliable benchmark for translation quality.

AI vs Translation: Benchmarking QE Models So You Don’t Have To Guess

Compared to traditional QE leaderboards, this evaluation was designed to reflect real localization scenarios:

- 7,817 sentences from ongoing translation projects

- 16 participating organizations

- 17 language combinations

- 191 individual tests

- Six QE systems: OpenAI, Claude, TAUS, Widn.AI, Pangeanic, ModernMT

- All data annotated by experienced, domain-specialist linguists

To ensure reliability, we applied strict cleanup criteria:

- Inter-annotator agreement >65%

- Removal of blanks and duplicates

- Representative mix of high- and low-quality segments from real projects
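The first two cleanup criteria can be sketched in a few lines. This is a minimal illustration, not the benchmark's actual tooling: the function names are hypothetical, and it assumes agreement is measured as raw percent agreement on Fit/Unfit labels, which the text does not spell out.

```python
def percent_agreement(labels_a: list[str], labels_b: list[str]) -> float:
    """Share of segments on which two annotators gave the same label."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equally long, non-empty label lists")
    matches = sum(a == b for a, b in zip(labels_a, labels_b))
    return matches / len(labels_a)


def drop_blanks_and_duplicates(segments: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Keep the first copy of each (source, target) pair that has text on both sides."""
    seen = set()
    cleaned = []
    for source, target in segments:
        pair = (source.strip(), target.strip())
        if not pair[0] or not pair[1] or pair in seen:
            continue
        seen.add(pair)
        cleaned.append(pair)
    return cleaned


# Hypothetical labels from two linguists for the same four segments.
agreement = percent_agreement(
    ["Fit", "Fit", "Unfit", "Fit"],
    ["Fit", "Unfit", "Unfit", "Fit"],
)
passes_cleanup = agreement > 0.65  # the >65% criterion from the list above
print(agreement, passes_cleanup)  # 0.75 True
```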

Every model was tested with the same zero-shot prompt, asking the LLM to act as a translation quality expert and score each segment on a 0–100 quality scale.

QE Thresholds: How to Tell AI What to Accept and What to Flag

When you run a Quality Estimation (MTQE) system, it usually returns a score (for example 0.0–1.0, or 0–100). But a score by itself doesn’t say whether a translation should be accepted or sent for human review. That’s where a threshold (or cut-off) comes in: you pick a value above which you trust the segment as “good,” and below which you treat it as “needs review.”
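Here is a minimal sketch of how a threshold turns a score into a decision. The function names, the 0.85 cut-off, and the choice to accept scores exactly at the threshold are illustrative assumptions, not settings from the benchmark.

```python
def normalize_score(score: float, scale_max: float = 1.0) -> float:
    """Map a raw QE score onto 0.0-1.0 (pass scale_max=100 for 0-100 scores)."""
    return score / scale_max


def route_segment(score: float, threshold: float, scale_max: float = 1.0) -> str:
    """Return 'accept' at or above the threshold, otherwise 'review'."""
    return "accept" if normalize_score(score, scale_max) >= threshold else "review"


# Hypothetical scores on a 0-100 scale, checked against a 0.85 cut-off.
decisions = [route_segment(s, threshold=0.85, scale_max=100) for s in (97, 85, 62)]
print(decisions)  # ['accept', 'accept', 'review']
```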

QE providers often recommend thresholds around 0.90–0.92, while research suggests that F1 scores (the balance of precision and recall) of about 80% are the minimum for reliable post-editing support. But what counts as the optimal threshold, and how do you find it? That is still the real challenge: lower thresholds accept more segments automatically but risk letting errors slip through, while higher thresholds catch almost all errors but accept few segments, limiting cost savings.

In practice:

- Thresholds vary by language pair and content type; there’s no universal rule.

- Choosing the right threshold balances risk (avoiding errors) and efficiency (reducing unnecessary post-editing).

- Safe QE deployment treats thresholds as decision tools, not convenience settings.

The Methodology: Finding the Best AI Quality Gate

Our goal was to see how QE performs in real-life settings, how models behave with recommended thresholds, and whether it is possible to identify an optimal threshold.

This involved three steps:

- Establishing reliable ground truth

We only used translation segments where two human linguists agreed on the classification.

Human scores were converted into a binary reference:

- Fit: good enough for publication

- Unfit: require post-editing
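As a rough sketch of the consensus rule, the snippet below keeps only segments where both linguists gave the same label. It starts from labels that are already binary, because the text does not detail how human scores were mapped to Fit or Unfit. The segment ids and helper name are hypothetical.

```python
from typing import Optional


def consensus_label(label_a: str, label_b: str) -> Optional[str]:
    """Return the shared label, or None when the two linguists disagree."""
    return label_a if label_a == label_b else None


# Hypothetical annotations: (segment id, linguist A label, linguist B label).
annotations = [
    ("seg-1", "Fit", "Fit"),
    ("seg-2", "Fit", "Unfit"),
    ("seg-3", "Unfit", "Unfit"),
]

ground_truth = {}
for seg_id, label_a, label_b in annotations:
    label = consensus_label(label_a, label_b)
    if label is not None:  # disagreements are left out of the reference set
        ground_truth[seg_id] = label

print(ground_truth)  # {'seg-1': 'Fit', 'seg-3': 'Unfit'}
```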

- Measuring real business KPIs with statistical indicators

| Business KPI | What it measures | Why it matters |
|---|---|---|
| Errors Detected (Recall Unfit) | % of bad segments correctly caught | Risk mitigation |
| Benefits Captured (Recall Fit) | % of good segments correctly accepted | Cost savings |
| Precision Fit | Quality of segments allowed to publish | Quality assurance |
| Precision Unfit | Avoiding unnecessary rework | Cost control |
| F1 Score | Balance of precision/recall | Overall performance |
| Accuracy | Overall correctness | Limited usefulness |
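To make the KPIs concrete, here is a small sketch that derives them from QE scores and human Fit/Unfit labels at a single threshold. The function name and toy data are hypothetical. Computing F1 for the Fit class is an assumption, since the table does not say which class the F1 score refers to.

```python
def qe_kpis(scores, is_fit, threshold):
    """Compute the table's KPIs for one threshold.

    scores: QE scores on a 0-1 scale; is_fit: human reference (True = Fit).
    A segment counts as accepted (predicted Fit) when score >= threshold.
    """
    accepted = [s >= threshold for s in scores]
    fit_accepted = sum(a and f for a, f in zip(accepted, is_fit))
    fit_flagged = sum((not a) and f for a, f in zip(accepted, is_fit))
    unfit_flagged = sum((not a) and (not f) for a, f in zip(accepted, is_fit))
    unfit_accepted = sum(a and (not f) for a, f in zip(accepted, is_fit))

    def ratio(num, den):
        return num / den if den else 0.0

    recall_fit = ratio(fit_accepted, fit_accepted + fit_flagged)
    precision_fit = ratio(fit_accepted, fit_accepted + unfit_accepted)
    return {
        "errors_detected": ratio(unfit_flagged, unfit_flagged + unfit_accepted),
        "benefits_captured": recall_fit,
        "precision_fit": precision_fit,
        "precision_unfit": ratio(unfit_flagged, unfit_flagged + fit_flagged),
        # ASSUMPTION: F1 is computed for the Fit class.
        "f1_fit": ratio(2 * precision_fit * recall_fit, precision_fit + recall_fit),
        "accuracy": ratio(fit_accepted + unfit_flagged, len(scores)),
    }


# Toy data: three Fit and three Unfit segments, threshold 0.85.
kpis = qe_kpis(
    scores=[0.95, 0.90, 0.80, 0.60, 0.88, 0.40],
    is_fit=[True, True, True, False, False, False],
    threshold=0.85,
)
# Every KPI comes out at 0.67 for this toy data.
print({name: round(value, 2) for name, value in kpis.items()})
```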

- Optimizing for business value

Threshold choice turned out to have the single biggest impact on whether model-based QE is feasible or not. We tested three thresholding approaches in the benchmark:

- Target Quality Level (80%): Lowest threshold that still catches at least 80% of real errors while maximizing savings.

- Standard Threshold (0.92): Fixed, ultra-conservative industry setting focused on safety over efficiency.

- Optimal Threshold (CustomMT): Data-driven threshold selected to balance error detection, savings, and trust in human judgment.
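The Target Quality Level idea can be sketched as a simple search: walk the thresholds upward and stop at the first one that catches the target share of errors. Because raising the threshold can only flag more segments, the first hit is also the one that accepts the most content. The function names, the 0.50 to 0.99 grid in 0.01 steps, and the toy data are assumptions for illustration.

```python
def errors_detected(scores, is_fit, threshold):
    """Share of Unfit segments whose score falls below the threshold."""
    unfit_scores = [s for s, fit in zip(scores, is_fit) if not fit]
    if not unfit_scores:
        raise ValueError("need at least one Unfit segment")
    return sum(s < threshold for s in unfit_scores) / len(unfit_scores)


def target_quality_threshold(scores, is_fit, target=0.80):
    """Lowest threshold on the grid that reaches the target, or None if none does."""
    for step in range(50, 100):  # 0.50, 0.51, ..., 0.99 in ascending order
        threshold = step / 100
        if errors_detected(scores, is_fit, threshold) >= target:
            return threshold
    return None


# Toy data: three Fit segments followed by five Unfit ones.
scores = [0.80, 0.90, 0.95, 0.55, 0.62, 0.70, 0.78, 0.93]
is_fit = [True, True, True, False, False, False, False, False]
print(target_quality_threshold(scores, is_fit))  # 0.79
```

The `None` case corresponds to data where no threshold on the grid reaches the target, for example when an Unfit segment scores 0.99 or higher.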

Let’s first consider the industry-standard threshold, typically set between 0.90 and 0.95. In our data, these settings (0.92, median value) produced:

- 98% Errors Detected

- 5.45% Benefits Captured

- <1% actual cost savings

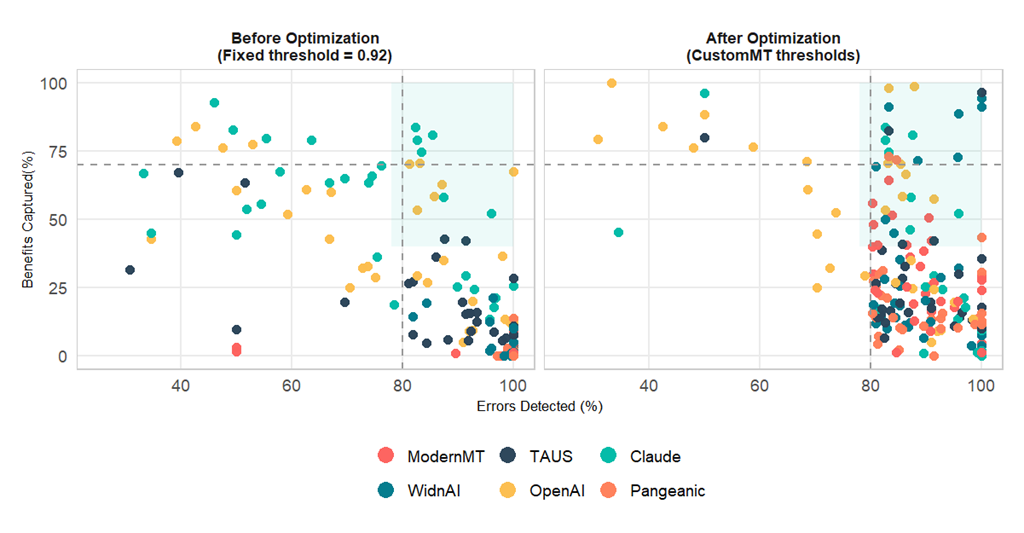

The chart shows that the industry-standard 0.92 threshold catches nearly all errors, which is good for quality control, but leaves almost no room for capturing additional benefits.

In other words: if nearly everything gets sent to human review → zero ROI.

The 80/20 approach (catch at least 80% of errors while maximizing savings) proves far more practical and cost-effective than the strict industry-standard 0.92 threshold. However, it is not always achievable because real-world data often lacks enough score variance, which can force weaker models to adopt higher thresholds with very limited benefit.

The Custom.MT Optimization Approach

To make QE viable, we tested every threshold from 0.50 to 0.99 and scored them using:

CustomMT Score = 70% Risk Focus + 30% Savings Focus + Human Trust (correlation)

This reflects real business priorities: protecting quality comes first, but not at the cost of making QE pointless.

Three constraints were enforced for a threshold to be considered production-safe:

- ≥80% Errors Detected

- ≥10% Recall Fit

- ≥70% Precision Fit

If no threshold met all three rules, fallback logic selected the safest feasible option.
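The sweep-and-constrain logic can be sketched as follows. This is not the actual CustomMT Score: the text does not define how Risk Focus, Savings Focus, or the Human Trust term are calculated, so the sketch assumes Risk Focus is Errors Detected and Savings Focus is Recall Fit, and leaves the Human Trust term out. The fallback rule (pick the threshold with the highest error detection) is also an assumption about what "safest feasible option" means.

```python
def rates(scores, is_fit, threshold):
    """Return (errors_detected, recall_fit, precision_fit) at one threshold."""
    accepted_fit = sum(s >= threshold and f for s, f in zip(scores, is_fit))
    accepted_unfit = sum(s >= threshold and not f for s, f in zip(scores, is_fit))
    total_fit = sum(is_fit)
    total_unfit = len(is_fit) - total_fit
    detected = (total_unfit - accepted_unfit) / total_unfit if total_unfit else 0.0
    recall_fit = accepted_fit / total_fit if total_fit else 0.0
    accepted = accepted_fit + accepted_unfit
    precision_fit = accepted_fit / accepted if accepted else 0.0
    return detected, recall_fit, precision_fit


def pick_threshold(scores, is_fit):
    """Sweep 0.50-0.99 and return (threshold, met_all_constraints)."""
    candidates = []
    for step in range(50, 100):
        threshold = step / 100
        detected, recall_fit, precision_fit = rates(scores, is_fit, threshold)
        safe = detected >= 0.80 and recall_fit >= 0.10 and precision_fit >= 0.70
        # ASSUMPTION: 70/30 blend of the two rates; Human Trust term omitted.
        weighted = 0.7 * detected + 0.3 * recall_fit
        candidates.append((threshold, detected, weighted, safe))

    production_safe = [c for c in candidates if c[3]]
    if production_safe:
        return max(production_safe, key=lambda c: c[2])[0], True
    # ASSUMED fallback: the threshold with the highest error detection.
    return max(candidates, key=lambda c: c[1])[0], False


# Toy data: five Fit segments followed by five Unfit ones.
scores = [0.95, 0.92, 0.90, 0.86, 0.70, 0.88, 0.75, 0.60, 0.55, 0.40]
is_fit = [True] * 5 + [False] * 5
print(pick_threshold(scores, is_fit))  # (0.89, True)
```

In the toy data, 0.89 wins because it flags every Unfit segment while still accepting three of the five Fit ones. Ties go to the lower threshold, since `max` returns the first best candidate.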

The chart below shows why threshold tuning is essential. After applying CustomMT-optimized thresholds, the picture changed: many runs moved into the high-benefit, high-safety zone (the blue area), achieving 75–100% error detection while capturing 15–45% of possible savings. This illustrates the core finding of our benchmark: QE is not inherently ineffective; it is ineffective when used with the wrong threshold.

Performance at the optimized threshold: Lowering the threshold to the level with the highest Custom.MT Score resulted in a median performance of 89% errors detected and 39.3% benefits captured.

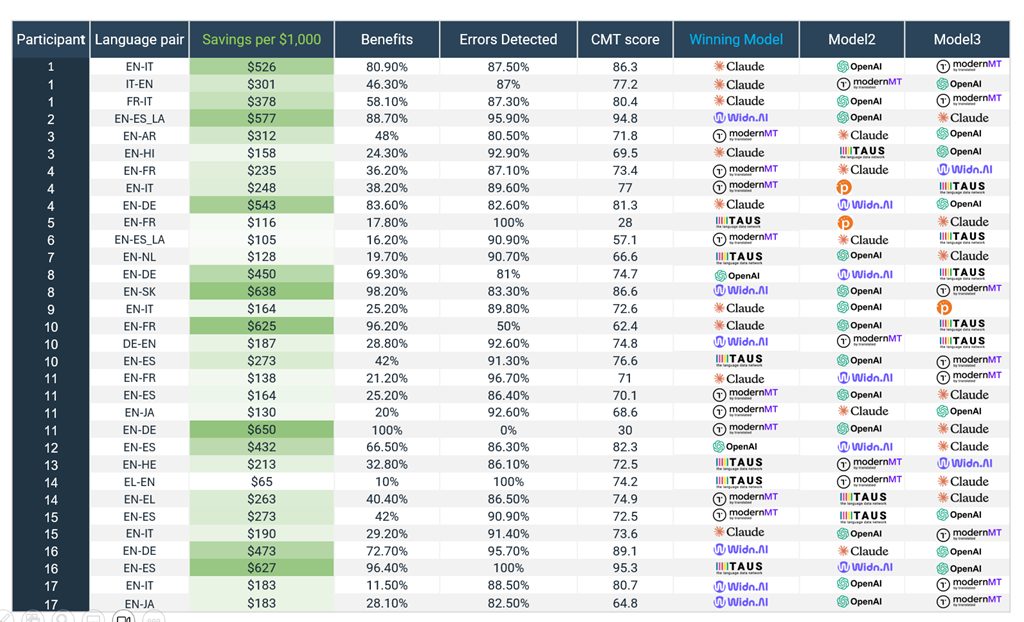

QE Providers: One Size Doesn’t Fit All

So you’ve set your thresholds — great. But should you just pick a single QE provider and call it a day? The short answer: no.

Our benchmark shows that no QE model consistently wins across all language pairs and content types. Some specialized models perform exceptionally well for certain languages, but fail in others.

For example, Claude excels at EN-IT (IT-EN), FR-IT, and EN-HI, consistently detecting errors and capturing benefits, but fails on DE-EN and EN-SK. Widn.AI, in contrast, performs best for EN-SK and DE-EN, showing strong reliability for these specific language pairs but not consistently across others.

While OpenAI and Claude are general-purpose and language-agnostic models, they emerged as top performers across more language pairs, often looking like clear winners from a pure scoring perspective.

The specialized QE systems tell a different story. While they may not win across as many language pairs, they are designed specifically for MT production. This makes them easier to deploy, monitor, and scale in real-life translation environments.

The takeaway: There is no single “best” QE provider for all scenarios. General-purpose models like OpenAI and Claude often win on raw scoring across many language pairs, but they lack ready-to-use localization workflows. Specialized QE systems may shine in fewer language pairs, yet they are built for MT production and are easier to deploy, control, and scale. The most effective strategy is not to choose one winner, but to combine the right QE for the right language pair, domain, and risk profile, maximizing quality and savings without relying on a single tool.
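One way to act on this is a routing table that maps each language pair to whichever system scored best on your own benchmark. The sketch below is illustrative only: the provider names are placeholders and the scores are made up, not results from this benchmark.

```python
def best_provider_per_pair(results):
    """Pick the highest-scoring provider for each language pair.

    results: list of dicts with 'pair', 'provider', and 'score' keys.
    """
    best = {}
    for row in results:
        current = best.get(row["pair"])
        if current is None or row["score"] > current["score"]:
            best[row["pair"]] = row
    return {pair: row["provider"] for pair, row in best.items()}


# Made-up scores for two placeholder providers on two language pairs.
results = [
    {"pair": "EN-IT", "provider": "provider_a", "score": 66.0},
    {"pair": "EN-IT", "provider": "provider_b", "score": 61.5},
    {"pair": "DE-EN", "provider": "provider_a", "score": 58.0},
    {"pair": "DE-EN", "provider": "provider_b", "score": 64.2},
]
routing = best_provider_per_pair(results)
print(routing)  # {'EN-IT': 'provider_a', 'DE-EN': 'provider_b'}
```

The same table could be keyed by (language pair, domain) if your content types behave differently.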

What You Can Get from Quality Estimation in Localization

When you tune QE thresholds for real business outcomes instead of relying on industry defaults, you start to see how different systems shape ROI in very different ways.

| Model | Opt. Threshold | Benefits Captured | Errors Detected | Errors Slipped | Precision Fit | Precision Unfit | Accuracy | CustomMT Score (F1-based) |
|---|---|---|---|---|---|---|---|---|
| ModernMT | 0.81 | 31.1% | 86.2% | 13.8% | 79.2% | 36.7% | 48.5% | 63.9 |
| Widn.AI | 0.85 | 35.2% | 86.3% | 13.7% | 74.6% | 38.7% | 56.0% | 67.7 |
| TAUS | 0.87 | 32.4% | 82.4% | 17.6% | 79.3% | 35.2% | 53.0% | 64.3 |
| OpenAI | 0.88 | 52.2% | 74.1% | 25.9% | 78.9% | 45.9% | 59.6% | 64.4 |
| Claude | 0.89 | 27.8% | 88.3% | 11.7% | 69.4% | 38.0% | 50.6% | 63.9 |
| Pangeanic | 0.73 | 22.4% | 86.1% | 13.9% | 69.9% | 33.8% | 45.2% | 60.9 |

At optimal settings (mean values), some models like OpenAI and Widn.AI unlock the highest measurable benefits (35–52%), meaning they let more low-risk content flow through without human touch. Yet they still keep error detection at solid levels: 74–86%. That’s the sweet spot most localization teams are hunting for today: more automation without jeopardizing quality.

Others, like Claude, TAUS, and ModernMT, lean more conservative: they maintain higher error-catch rates (82–88%), but they give up some of the automation gains. These systems behave more like “safety-first copilots”: great when quality risk is the dominant concern, but not the engines of major cost reduction.

When you look at precision-fit, the standouts are ModernMT, TAUS, and OpenAI (≈79%), showing they’re the most reliable at correctly identifying genuinely “fit-to-skip” segments, while Claude and Pangeanic sit noticeably lower, reinforcing why they struggle to deliver confident automation.

You will also notice that lower thresholds don’t guarantee more efficiency. Some models, despite their low thresholds, deliver the smallest benefit gain and the weakest precision, meaning they greenlight more content but not always the right content. That’s the trap many teams fall into when threshold tuning is done blindly.

Next Steps: Using QE, What We Learned, and Where We Go From Here

This benchmark took far longer than we expected. If you thought linguists arguing about translation quality was intense, try adding business analysts into the mix. We spent hours debating which data is reliable and how to convert human evaluation scores into machine-readable signals. But it was worth it: we now have a solid foundation for measuring QE performance in real production settings.

So what’s next? Our plan is clear:

- Annotate the errors QE misses: Identify which mistakes slip through the AI net.

- Assess severity: Understand which misses are minor annoyances and which could damage quality or compliance.

- Experiment with QE workflows:

  - Explore chaining multiple QE models to see how it affects results. For example, run a high-recall “unfit” QE first, then a high-precision “fit” QE, or run two or more QEs in parallel and send to human post-editing only the segments flagged by all systems.

  - Another option is a staged workflow: high-recall “unfit” QE → automatic post-editing → high-precision “fit” QE → final human review.
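The parallel variant is the easiest of these workflows to sketch. The snippet below sends a segment to post-editing only when every QE system scores it below that system's own threshold. The system names, thresholds, and scores are hypothetical, and this is an untested idea from our plan rather than a benchmarked setup.

```python
def needs_post_editing(scores_by_system, thresholds):
    """Flag a segment only when every QE system scores it below its own threshold."""
    return all(scores_by_system[name] < thresholds[name] for name in thresholds)


# Hypothetical per-system thresholds and scores for two segments.
thresholds = {"qe_a": 0.85, "qe_b": 0.80}
both_flag = needs_post_editing({"qe_a": 0.70, "qe_b": 0.65}, thresholds)
only_one_flags = needs_post_editing({"qe_a": 0.70, "qe_b": 0.90}, thresholds)
print(both_flag, only_one_flags)  # True False
```

Swapping `all` for `any` gives the stricter variant, where a single system is enough to trigger review. That sends more segments to humans but lets fewer flagged ones through.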

Spoiler alert: we also conducted a short analysis on annotated open datasets using the same QE providers. So we know that OpenAI GPT and Claude tend to miss more minor (27%, 21%) and critical (24%, 19%) errors than major ones (16%, 12%). Knowing where the models stumble is essential for understanding the next layer of risk.

Acknowledgments

We sincerely thank all the companies that participated and the linguists who generously contributed their time to create, annotate, and share data. We are especially grateful to HumanSignal for providing access and support for working with data in Label Studio Enterprise.

Follow our blog or get in touch to join our next research project and stay updated on practical insights in GenAI translation.

Frequently Asked Questions (FAQ)

There is no universal best QE. General-purpose LLMs (like OpenAI and Claude) often score well across many language pairs, while specialized QE systems are easier to deploy and manage in localization workflows. Benchmark multiple systems on your own data for best results.

Don’t rely on default thresholds: they are usually overly conservative. Threshold tuning based on your data has a much bigger impact on ROI than the choice of QE model itself. You can try threshold optimization here: https://custom.mt/join-qe-benchmarking/ to get a summary of the best QE models for your content and their recommended thresholds.

For reliable QE benchmarking, use real production MT data that reflects your actual language pairs and domains, with roughly 50–70% good segments and 30–50% bad segments. Avoid extreme imbalance, which would distort risk and savings measurements, and aim for at least 300 sentences for meaningful results.

One threshold does not fit every scenario. QE thresholds depend on language pair, domain, and content type. A threshold that works well for one scenario may let errors slip or block too many good segments in another. Regular monitoring and threshold adjustment are essential to balance risk and automation benefits.

You can combine multiple QE systems by chaining or parallelizing them, for example by flagging segments for human review only when multiple systems agree, to better balance risk and efficiency.

QE does not replace linguists. It works best as a quality signal, not a switch: it reduces unnecessary human review while keeping linguists in control of quality.