Author: Giada Pantana

What is machine translation?

Nowadays the world of translation is turning more and more to machine translation for several reasons. Before going through them, though, we need to clarify what machine translation is.

Machine translation draws on knowledge from several fields of study, ranging from Computational Linguistics to Artificial Intelligence and Computer Programming. It uses computers to automatically translate from one natural language to another. The goal of this research field is to produce a fully automated system that delivers publishable-quality translation, ideally without any human intervention. With this definition in mind, we can guess why machine translation has become more and more central in the language market. It helps Language Service Providers (LSPs) and freelance translators save time and consequently reduce costs. This kind of automated translation also helps professionals maintain terminological consistency, which is especially important when translating technical and specialized texts.

Has it always been as irreplaceable as it seems today? Absolutely not. Let’s see why.

The initial phases of machine translation

Like a baby taking its first steps, machine translation also began from scratch.

Translation itself has existed since ancient times. The need for automated processes grew as the market demanded larger volumes of translation. In the 20th century, the technical conditions finally seemed to be in place to light the fuse.

During this period, the Russian inventor Pëtr Petrovič Trojanskij designed a machine able to translate from language A to language B. The technology required the help of two native speakers, one of language A and one of language B. This invention went beyond mechanical dictionaries. It presupposed a linguistic concept that was very advanced for its time: that all languages share a common logical structure. This “common ground” was the “key” to automatically translating from one language to another.

In the following years, the computer was invented, a milestone in history. Alan Turing helped decrypt German messages encoded with the Enigma machine. This huge progress gave a strong boost to research in machine translation.

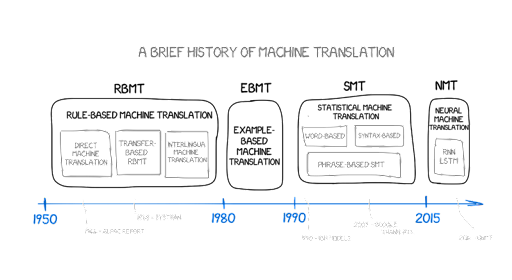

First step: Rule-Based Machine Translation

From that moment on, machine translation has gone through different phases. The first one is the Rule-Based Machine Translation (RBMT) model.

At this stage, language was still considered a code. Linguists focused on designing grammatical and language rules that could convert a text from one language into another.

The first experiment was carried out in 1954 by Peter Sheridan from IBM, one of the first IT companies, and Paul Garvin from Georgetown University, Washington D.C., USA. The test involved translating some Russian sentences from a chemistry book into English, using a machine that contained a vocabulary and a few grammatical rules. The output was impressive! The machine succeeded in the translation, and this result led to new funding and interest in the field. This momentum also reflected a growing enthusiasm for research in formal linguistics and semiotics.

Since the field had started from almost nothing, this experiment was considered a great achievement. Despite the excitement, though, translation with rule-based models had several problems and required a great deal of human post-editing. The major issues were:

- When sentences deviated from “the rule”, the machine failed;

- Linguists had to create all the rules and language models, so it was very expensive to update the model or to add new languages;

- Translation outputs had serious problems with fluency (mostly word order), so they read as quite stilted.
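To make the rule-based idea and its first weakness more concrete, here is a minimal sketch in Python. It is not the system used in the 1954 experiment: the Spanish-to-English lexicon, the part-of-speech tags and the single reordering rule are all invented for illustration.

```python
# Toy rule-based translator: a hypothetical Spanish-to-English lexicon
# plus one hand-written rule (noun + adjective -> adjective + noun).
LEXICON = {
    "el": ("the", "DET"),
    "gato": ("cat", "NOUN"),
    "negro": ("black", "ADJ"),
    "duerme": ("sleeps", "VERB"),
}

def translate_rbmt(sentence):
    words = sentence.lower().split()
    # Step 1: dictionary lookup; words outside the lexicon are flagged.
    tagged = [LEXICON.get(w, (f"<{w}?>", "UNK")) for w in words]
    # Step 2: apply the reordering rule wherever it matches.
    i = 0
    while i < len(tagged) - 1:
        if tagged[i][1] == "NOUN" and tagged[i + 1][1] == "ADJ":
            tagged[i], tagged[i + 1] = tagged[i + 1], tagged[i]
            i += 2
        else:
            i += 1
    return " ".join(word for word, _ in tagged)

print(translate_rbmt("El gato negro duerme"))  # the black cat sleeps
print(translate_rbmt("El gato negro ronca"))   # the black cat <ronca?>
```

The second call shows what happens as soon as a sentence steps outside the lexicon: the system has nothing to fall back on, and a linguist has to add the missing entry or rule by hand.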

Disillusionment

From these difficulties, researchers realized a hard truth: languages cannot be reduced to codes.

Linguistic problems became more frequent and turned into an insuperable barrier that was soon identified: the semantic barrier. The systems developed up to that point did not seem good enough to deliver a satisfactory level of translation. The disillusionment hit so hard that in 1966 the Automatic Language Processing Advisory Committee (ALPAC) issued a report that signalled the end of experimentation, describing machine translation as “slower, less accurate and twice as expensive as human translation” and stating that “we do not have useful machine translation [and] there is no predictable prospect of useful machine translation”.

Second step: Statistical Machine Translation

How did machine translation recover from this harsh judgment? The conclusion was clear-cut and came from one of the most eminent bodies in the field. The direct consequence was a substantial halt in funding; only small research communities continued working in this field.

Nevertheless, Linguistics never stopped expanding and developed a new branch, Corpus Linguistics. A corpus is a collection of linguistic data, selected according to defined criteria in order to represent a sample of a language.

In the late 80s and early 90s, this branch of Linguistics became the launching pad for renewed research in machine translation, which used bilingual parallel corpora as the core from which Statistical Machine Translation (SMT) emerged.

The model produced translations from statistical calculations based on the data the machine was fed. This type of machine translation worked better than the rule-based one: since the corpus was built on existing translations, fluency issues were less frequent. But for all segments that did not closely resemble those in the corpus, the machine did not give accurate results. The hardest language pairs for this model were those with very different word orders, as is often the case for languages belonging to different language families. Another difficulty was that the model’s success depended on the availability and quality of the corpora: training the machine on a large amount of high-quality corpora results in a more effective translation engine.
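To give an idea of what “statistical calculations based on the data” can look like, here is a minimal sketch in Python of word-translation probabilities learned from a parallel corpus. It follows the style of IBM Model 1, one classic word-based SMT model, chosen here only as an illustration; the three-sentence German-English corpus and the function name `best_translation` are made up for the example.

```python
from collections import defaultdict

# Tiny invented parallel corpus: (source sentence, target sentence).
corpus = [
    ("das haus", "the house"),
    ("das buch", "the book"),
    ("ein buch", "a book"),
]
pairs = [(src.split(), tgt.split()) for src, tgt in corpus]
tgt_vocab = {e for _, e_sent in pairs for e in e_sent}

# t[(e, f)] approximates P(target word e | source word f).
# Start uniform for every word pair that co-occurs in a sentence pair.
t = {(e, f): 1.0 / len(tgt_vocab)
     for f_sent, e_sent in pairs for e in e_sent for f in f_sent}

for _ in range(20):  # expectation-maximisation iterations
    count = defaultdict(float)
    total = defaultdict(float)
    for f_sent, e_sent in pairs:
        for e in e_sent:
            # Share each target word among the source words of its
            # sentence, in proportion to the current probabilities.
            norm = sum(t[(e, f)] for f in f_sent)
            for f in f_sent:
                frac = t[(e, f)] / norm
                count[(e, f)] += frac
                total[f] += frac
    # Re-estimate the probabilities from the fractional counts.
    for (e, f), c in count.items():
        t[(e, f)] = c / total[f]

def best_translation(f):
    """Most probable target word for source word f (0.0 if never seen together)."""
    return max(tgt_vocab, key=lambda e: t.get((e, f), 0.0))

print({f: best_translation(f) for f in ["das", "haus", "buch", "ein"]})
# {'das': 'the', 'haus': 'house', 'buch': 'book', 'ein': 'a'}
```

Nobody wrote a rule saying that “haus” means “house”: the pairing emerges from the counts alone. This is also why the quality and size of the corpus matter so much, and why a source word that never appears in the corpus cannot be translated at all.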

Third and last step: Neural Machine Translation

The attempts to fix these problems led to the current state of the art, Neural Machine Translation (NMT). Research in NMT started in the late 90s. It introduced artificial neural networks into the previous model, Statistical Machine Translation.

The artificial neural network is loosely modelled on how our brain works. Basically, we give the machine some data to learn from, so that it can automatically predict an output for new input. What neural networks do is inference.

Is it enough to call machine translation a “mission accomplished”?

Yes and no. State-of-the-art machine translation engines cannot yet fully replace human translators. Still, MT outputs are not that bad. In less than one century, we started from almost zero and arrived at a good level of translation, which is most evident in texts with a recurrent structure, such as documentation (e.g. patents or contracts).

Also, thanks to the Attention mechanism, NMT technology has mitigated the notorious problem of machine translation: word order. The Attention mechanism is able to “weigh” the words. On the one hand, it deals with word order, and on the other, it handles the problem of long-range references.
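The “weighing” can be sketched in a few lines of Python. This is a minimal illustration of scaled dot-product attention, a common formulation of the mechanism, using only the standard library; the two-dimensional word vectors and the query are invented numbers, not values from a trained engine.

```python
import math

def softmax(scores):
    # Turn raw scores into positive weights that sum to 1.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    d = len(query)
    # Score each source word by its similarity to the query.
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
              for key in keys]
    weights = softmax(scores)
    # The context is the weighted average of the value vectors.
    context = [sum(w * v[i] for w, v in zip(weights, values))
               for i in range(len(values[0]))]
    return weights, context

# Hypothetical vectors for the source words "the", "black", "cat".
words = ["the", "black", "cat"]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = keys  # kept identical to the keys for simplicity
query = [1.0, 1.0]  # what the decoder is "looking for" at this step

weights, context = attention(query, keys, values)
print(max(zip(weights, words))[1])  # cat
```

Because the weights are recomputed for every output word, the engine can look at any position in the source sentence, however far away, which is what helps with word order and long-range references.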

Nonetheless, Neural Machine Translation still faces linguistic barriers, such as mistranslations and inconsistent tone of voice, as well as technical ones, like the quantity and quality of training data. To minimize these issues, the engine must be trained so that it “learns” how to fix these errors.

Today, many companies offer this service to keep the machine from propagating errors. Custom.MT could help you build your own MT model; check our mission at: https://custom.mt

What’s next?

The future is already here, because NMT is continuously being improved to get the best out of this tool. At the recent AMTA 2022 conference, big companies talked about dynamic adaptation of neural models, in which the training phase is always active. This is a concrete gain for translators, because it means that every time we do Machine Translation Post-Editing (MTPE), the machine learns from our corrections and becomes less likely to make the same mistake twice.

The strength of this field is its multidisciplinarity. The close collaboration and combined efforts of IT engineers and programmers, linguists, and LSPs benefit the whole professional community. Increasing these machines’ reliability and helpfulness makes the job of language professionals easier and faster, and their output higher in quality and more precise.