Summary:

- GPT-4 can detect fluency and accuracy errors, reaching up to 80% agreement with human linguists.

- A chain of short Chain-of-Thought (CoT) prompts outperforms a single long prompt and produces fewer false positives.

- AI performs best on non-creative content (e.g., travel, news).

- GPT-3.5 Turbo is significantly less reliable for language quality assurance (LQA) use cases.

- GenAI is most effective in human-in-the-loop workflows for pre-labeling and scoring translation errors.

This article details tactics for detecting and labeling translation errors with the GPT-4 model and for building bots for language quality assurance.

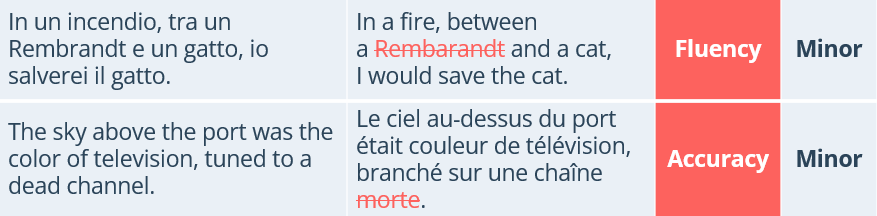

In the error-labeling example above, Rembrandt’s name is misspelled. That is a Fluency error: it does not impede understanding, but it looks sloppy in a marketing piece about a work of art. The second line, which opens William Gibson’s novel Neuromancer, shows a different problem: the French machine translation is incorrect, because a “dead” channel in French is défunte, not literally morte. That is an Accuracy error.

The industry’s exploration of ways to adapt OpenAI’s models for quality assurance began with the GEMBA metric from Microsoft researchers Christian Federmann and Tom Kocmi, introduced in the paper “Large Language Models Are State-of-the-Art Evaluators of Translation Quality,” published in May 2023. Since then, building applications with GenAI has become easier, and technically savvy localization teams shed fewer tears adopting this technology.

Before you go on to learn more about the state of quality estimation today, check out our new tool for automated QA. The video below demonstrates how easily you can assess output quality with it. To learn more, request a personal demo at https://custom.mt/book-a-call/

Automated MT quality estimation (QE) tool by Custom.MT

80% Agreement

At Custom.MT, we ran our tests around Christmas of 2023 and targeted three types of content: entertainment, travel, and news publications. In the most successful test with travel content, the AI annotator agreed with humans in 80% of cases, which was very close to the level of inter-annotator agreement between skilled humans.

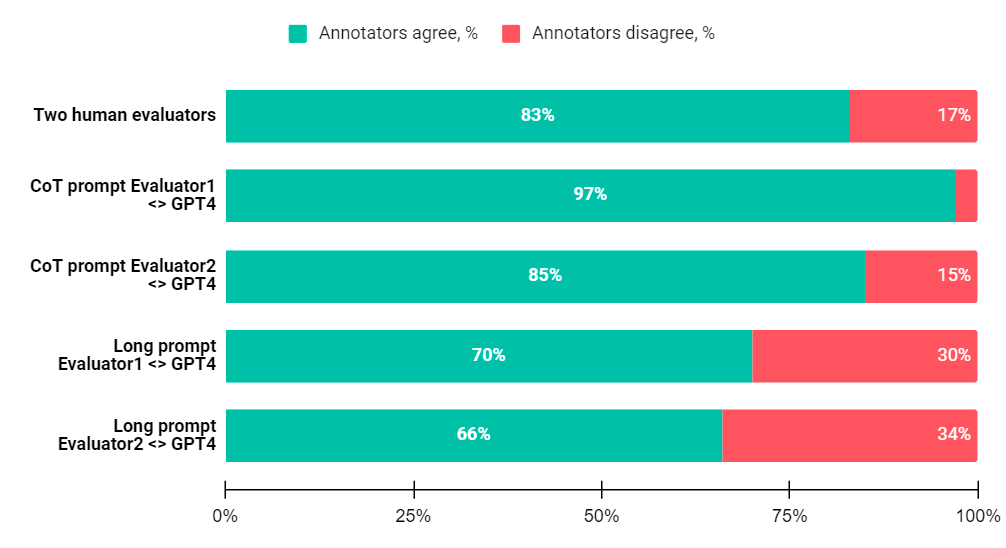

The chart above shows the results of two GPT-4 prompts that our engineer Emir Karabiber used to evaluate machine translation. The sample consisted of paragraphs describing tourist destinations, and the language pair was English to Italian. Before the GenAI test, two Italian linguists evaluated the machine translation, and, as the first bar in the chart shows, they agreed with each other’s judgments in 83% of cases.
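The article does not state exactly how agreement was computed. A minimal sketch, assuming agreement means the share of segments on which two annotators assign the same label; the labels below are hypothetical. Measuring at two levels also shows how annotators can agree on whether an error exists while disagreeing on its type or severity.

```python
from collections.abc import Hashable, Sequence


def percent_agreement(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Share of segments on which two annotators assigned the same label."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("annotations must be non-empty and aligned by segment")
    matches = sum(1 for x, y in zip(a, b) if x == y)
    return matches / len(a)


# Hypothetical annotations: one (type, severity) tuple per segment, None = no error.
linguist_1 = [None, ("Accuracy", "Major"), ("Fluency", "Minor"), None, ("Accuracy", "Minor")]
linguist_2 = [None, ("Accuracy", "Major"), ("Fluency", "Major"), None, None]

# Full-label agreement: error type and severity must both match.
print(percent_agreement(linguist_1, linguist_2))  # 0.6

# Detection-only agreement: only "error vs. no error" must match.
detect_1 = [label is not None for label in linguist_1]
detect_2 = [label is not None for label in linguist_2]
print(percent_agreement(detect_1, detect_2))  # 0.8
```

Raw percent agreement does not correct for agreement by chance; kappa statistics do.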

For the GPT evaluation, the engineer designed two prompts:

- A Chain-of-Thought (CoT) prompt: a series of short individual queries organized into a tree-like decision structure built with a Python script. For example: “Are there translation errors in the {text} below?” If yes: “The {text} contains a translation error. Classify the error by type.” Then: “The translated {text} contains an {Accuracy} error. Please rate the severity: Minor, Major, Critical.”

- A single 200-word prompt with all instructions, examples, and the error typology definitions combined.
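The three-step chain in the first bullet can be sketched as follows. This is a minimal illustration, not the authors’ script: it assumes a two-type typology (Accuracy, Fluency) and the three severities from the example prompts, and `ask` is a hypothetical placeholder for whatever function sends one prompt to the model and returns its reply.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical: any function that sends one prompt to an LLM and returns its reply text.
Ask = Callable[[str], str]

# Assumed typology for this sketch.
ERROR_TYPES = ("Accuracy", "Fluency")
SEVERITIES = ("Minor", "Major", "Critical")


@dataclass
class Label:
    error_type: str
    severity: str


def pick(reply: str, options: tuple) -> Optional[str]:
    """Return the first option (in tuple order) that appears in the reply, if any."""
    lowered = reply.lower()
    for option in options:
        if option.lower() in lowered:
            return option
    return None


def evaluate_segment(text: str, ask: Ask) -> Optional[Label]:
    """Walk the decision tree; None means the model reported no error."""
    # Step 1: yes/no detection. Error-free segments need only this one call.
    detected = ask(f"Are there translation errors in the text below? Answer yes or no.\n\n{text}")
    if not detected.strip().lower().startswith("yes"):
        return None

    # Step 2: classify the error type within the typology.
    type_reply = ask(
        "The text below contains a translation error. "
        f"Classify the error by type: {', '.join(ERROR_TYPES)}.\n\n{text}"
    )
    error_type = pick(type_reply, ERROR_TYPES)
    if error_type is None:
        # Unparseable reply: keep the segment flagged for a linguist instead of guessing.
        return Label("Unclassified", "Unrated")

    # Step 3: rate severity for that specific error type.
    severity_reply = ask(
        f"The translated text below contains an error of type {error_type}. "
        f"Please rate the severity: {', '.join(SEVERITIES)}.\n\n{text}"
    )
    return Label(error_type, pick(severity_reply, SEVERITIES) or "Unrated")


def fake_ask(prompt: str) -> str:
    """Offline stand-in for a real model call, for trying the flow."""
    if prompt.startswith("Are there"):
        return "Yes."
    if "Classify" in prompt:
        return "Accuracy"
    return "Major"


print(evaluate_segment("sample segment", fake_ask))
# Label(error_type='Accuracy', severity='Major')
```

Each call asks one narrow question, which mirrors the short, unambiguous instructions that performed best in the test.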

The long prompt reached 64% and 70% agreement with the two linguists, respectively, and didn’t prove very useful. It also produced multiple false positives, flagging errors that did not exist: long, detailed instructions made the model find problems in the text where there were none.

The chain of short CoT prompts with unambiguous instructions produced excellent results, agreeing almost completely with one of the evaluators and up to 85% with the second. This pattern of better results from a chain of shorter prompts repeated itself with the other types of content.

AI is Less Suitable for Creative Content

In the test with entertainment content, we recorded a less inspiring performance: GPT-4’s average agreement with human annotations was 63%. The model was adept at separating sentences without errors from those that contained errors, but its judgments veered away from human annotations when deciding on error types and severities. One reason for the difference is that entertainment content is highly creative, and it was sent to the model as isolated phrases without context.

Main Test Results

Completing our first round of testing, we drew the following four conclusions.

- GPT-4 is capable of performing automated quality assurance. It works especially well in detecting errors but needs improvement in classifying them by type and severity.

- GPT-3.5 Turbo lagged far behind GPT-4.

- A chain of short prompts with clear instructions worked significantly better than a single detailed prompt.

- Agreement levels with human annotations differed across content types. Our prompts were less effective with highly creative content.

Business Impact of Gen AI Language Quality Assurance

Because generative AI models hallucinate and cannot take responsibility for their output, their practical use for now is in a human-in-the-loop workflow. A GenAI model can detect and annotate errors during LQA and then pass them back to the linguist. This approach can reduce the need for revision by a second pair of eyes and, as a result, speed up and optimize LQA services.
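The hand-off to the linguist can be sketched as a small pre-label record. This is a minimal illustration under assumptions: the model is asked to reply with a JSON list, the typology is Accuracy/Fluency with three severities, and `PreLabel`, `parse_prelabels`, and `acceptance_rate` are hypothetical names. Tracking how often linguists accept pre-labels is one way to watch for the false positives observed with the long prompt.

```python
import json
from dataclasses import dataclass

# Assumed typology; substitute the one your LQA program uses.
ERROR_TYPES = ("Accuracy", "Fluency")
SEVERITIES = ("Minor", "Major", "Critical")


@dataclass
class PreLabel:
    segment_id: int
    error_type: str
    severity: str
    status: str = "pending"  # a linguist later sets "accepted" or "rejected"


def parse_prelabels(model_output: str) -> tuple[list[PreLabel], list]:
    """Split the model's JSON reply into typology-conformant pre-labels and rejects.

    Assumes the model was asked to reply with a JSON list of objects such as
    {"segment": 3, "type": "Accuracy", "severity": "Major"}.
    """
    valid, invalid = [], []
    for item in json.loads(model_output):
        if (
            isinstance(item, dict)
            and "segment" in item
            and item.get("type") in ERROR_TYPES
            and item.get("severity") in SEVERITIES
        ):
            valid.append(PreLabel(int(item["segment"]), item["type"], item["severity"]))
        else:
            invalid.append(item)  # off-typology output is surfaced, not silently dropped
    return valid, invalid


def acceptance_rate(labels: list[PreLabel]) -> float:
    """Share of reviewed pre-labels the linguist accepted; a low rate points to false positives."""
    reviewed = [lab for lab in labels if lab.status != "pending"]
    if not reviewed:
        return 0.0
    return sum(lab.status == "accepted" for lab in reviewed) / len(reviewed)


raw = (
    '[{"segment": 1, "type": "Accuracy", "severity": "Major"},'
    ' {"segment": 2, "type": "Style", "severity": "Minor"}]'
)
valid, invalid = parse_prelabels(raw)
valid[0].status = "accepted"  # the linguist confirms the first pre-label
print(len(valid), len(invalid), acceptance_rate(valid))  # 1 1 1.0
```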

In machine translation evaluation, GenAI can help by generating automated reports on the performance of fine-tuned engines. Areas such as tone of voice, readability, and geopolitical and cultural nuance were hard to crack with older technology built on regular expressions. With generative AI, localization teams can add these categories to their LQA metrics and address them.

Furthermore, pre-labeling errors will streamline MT evaluations with multiple experts. Annotators often make different calls on error types and severities. That’s why, in professional evaluations, we advise using two to three or more subject matter experts and then calculating their agreement with Cohen’s kappa (for two raters) and Fleiss’ kappa (for three or more). To increase the consistency of judgment in the first place, we envision an AI assistant built with GPT to detect and pre-label errors according to the selected typology.
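Both kappa statistics correct raw agreement for the agreement expected by chance: κ = (p_o − p_e) / (1 − p_e), where p_o is the observed agreement and p_e is the chance agreement estimated from how often each label is used. A minimal pure-Python sketch follows; the labels in the usage example are hypothetical. For production use, established implementations exist, such as scikit-learn’s `cohen_kappa_score` and statsmodels’ `fleiss_kappa`.

```python
from collections import Counter
from collections.abc import Hashable, Sequence


def cohens_kappa(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two annotators labeling the same segments."""
    n = len(a)
    if n == 0 or n != len(b):
        raise ValueError("need two non-empty label lists of equal length")
    p_o = sum(x == y for x, y in zip(a, b)) / n  # observed agreement
    count_a, count_b = Counter(a), Counter(b)
    # Chance agreement: probability both pick label k independently, summed over k.
    p_e = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if p_e == 1:
        return 1.0  # both used one identical label throughout; kappa is undefined, treated as 1
    return (p_o - p_e) / (1 - p_e)


def fleiss_kappa(ratings: Sequence[Sequence[Hashable]]) -> float:
    """Fleiss' kappa; ratings[i] lists every annotator's label for segment i."""
    if not ratings:
        raise ValueError("need at least one rated segment")
    n_raters = len(ratings[0])
    if n_raters < 2 or any(len(row) != n_raters for row in ratings):
        raise ValueError("every segment needs the same number (>= 2) of ratings")
    n_items = len(ratings)
    totals: Counter = Counter()
    agreement_sum = 0.0
    for row in ratings:
        counts = Counter(row)
        totals.update(counts)
        # Share of rater pairs that agree on this segment.
        agreement_sum += sum(c * (c - 1) for c in counts.values()) / (n_raters * (n_raters - 1))
    p_bar = agreement_sum / n_items
    p_e = sum((t / (n_items * n_raters)) ** 2 for t in totals.values())
    if p_e == 1:
        return 1.0  # a single label used everywhere; same convention as above
    return (p_bar - p_e) / (1 - p_e)


# Hypothetical labels: "none", "acc" (Accuracy), "flu" (Fluency).
a = ["none", "acc", "flu", "none", "acc", "none"]
b = ["none", "acc", "acc", "none", "none", "none"]
print(round(cohens_kappa(a, b), 3))  # 0.4

three_raters = [
    ["none", "none", "none"],
    ["acc", "acc", "flu"],
    ["acc", "acc", "acc"],
    ["none", "flu", "none"],
]
print(round(fleiss_kappa(three_raters), 3))  # 0.467
```

Note that the two raters in the first example agree on 4 of 6 segments (67%), yet kappa is only 0.4, because much of that agreement comes from both using the “none” label frequently.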

Takeaways

Our test ends with a call to action: broader collaboration between localization departments could deepen understanding of the topic and spread knowledge about it. The two most prominent use cases for language quality evaluation with generative AI are text and images (localized screenshots). Do run such a test in your organization, and share the results.

In 2024, ISO published its newest standard on translation quality evaluation. Now, language models can follow up and power applications that will industrialize it. Perhaps the language industry will finally solve the puzzle it has been working on since the 1990s.

* * *

By: Konstantin Dranch and Emir Karabiber

Frequently Asked Questions

What is GenAI language quality evaluation?

GenAI language quality evaluation uses large language models like GPT-4 to detect, classify, and label translation errors. The model evaluates fluency, accuracy, tone, and terminology issues and provides structured feedback for linguists.

How accurate is GPT-4 at detecting translation errors?

In our tests, GPT-4 reached up to 80% agreement with human annotators on non-creative content. It was especially strong at identifying whether an error exists, though less reliable at rating severity.

Which prompting approach works best?

A chain of Chain-of-Thought (CoT) prompts with short, clear, sequential questions performs best.

Which content types produce the best results?

Structured, non-creative text such as travel, news, and technical content produces the highest evaluation accuracy. Creative content (entertainment, novels, slogans) leads to lower agreement.

Can GenAI replace human linguists in LQA?

Not yet. GenAI is most effective as part of a human-in-the-loop workflow, pre-labeling errors and speeding up review. Final judgment and severity scoring still require human expertise.

How can GenAI improve MT evaluation?

AI can automatically generate quality reports, identify error patterns, and add new evaluation dimensions such as style, tone of voice, and cultural nuance, areas that were difficult to automate with earlier systems.

Bonus: Localization Error Typologies

Typologies range from short lists of 7 points (of which 3-4 are commonly used) to 50+ error type definitions in MQM-Full. Since the human mind can hold only a limited number of definitions, selecting the right number of error types is hard: it requires constant balancing between theoretical accuracy and practical usability.

LISA QA model (1990s). This progenitor of other metrics introduced the scorecard concept with error types and weights, and LQA software was built around it.

SAE J2450 Translation Quality Metric (1997-2001). The automotive companies General Motors, Ford, and Chrysler agreed on a common way to score translations and, after four years of debate, standardized it under the Society of Automotive Engineers. It comprises seven error types: wrong term, syntactic error, omission, word structure or agreement, misspelling, punctuation, and miscellaneous error.

Multidimensional Quality Metrics (MQM, 2012). Developed by Arle Lommel at the German Research Center for Artificial Intelligence (DFKI) as part of a European Union-funded program, this typology has become the source for most modern typologies.

TAUS Dynamic Quality Framework (DQF). Launched in 2012, harmonized with MQM, and built into software that several enterprise programs adopted.

ASTM WK46396 (2014). Arle Lommel continued his DFKI work under a US standards organization, ASTM International.

ISO 5060 (2024). Evaluation of Translation Output, the most recent ISO standard on the subject, culminating three years of work by technical committee ISO/TC 37.
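The scorecard idea running through these typologies, error types combined with weights, can be sketched as follows. This is a minimal illustration: the severity weights and the per-1,000-word normalization are assumptions chosen for the example, not values taken from LISA, SAE J2450, MQM, or ISO 5060.

```python
from collections import Counter
from dataclasses import dataclass

# Assumed severity weights for illustration only; each program sets its own.
SEVERITY_WEIGHTS = {"Minor": 1, "Major": 5, "Critical": 10}


@dataclass(frozen=True)
class Issue:
    error_type: str  # e.g. a category from SAE J2450 or MQM
    severity: str    # must be a key of SEVERITY_WEIGHTS


def scorecard(issues: list[Issue], word_count: int, per_words: int = 1000) -> dict:
    """Sum weighted penalties, normalize by text length, and count issues per type."""
    if word_count <= 0:
        raise ValueError("word_count must be positive")
    penalty = sum(SEVERITY_WEIGHTS[issue.severity] for issue in issues)
    return {
        "penalty_points": penalty,
        "penalty_per_words": penalty * per_words / word_count,
        "issues_by_type": dict(Counter(issue.error_type for issue in issues)),
    }


issues = [
    Issue("Misspelling", "Minor"),
    Issue("Wrong term", "Major"),
    Issue("Omission", "Critical"),
]
result = scorecard(issues, word_count=2000)
print(result["penalty_per_words"])  # 8.0
```

Normalizing by word count lets teams compare files of different lengths; a pass/fail threshold on the normalized penalty would be set by each program.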