Machine Translation Quality Estimation (QE) is a method to triage good and bad machine translations with the goal of reducing translation costs. In October, a translation company claimed it had recently achieved savings of 35% on a $1 million localization contract. This is one of the first public proofs of the economic benefits of QE, and it may encourage other localization teams to adopt it. Dubbed the next “game changer” after machine translation and translation memory, quality estimation may reduce costs at the risk of leaving some MT errors undetected.

First Case with Financial Benefits

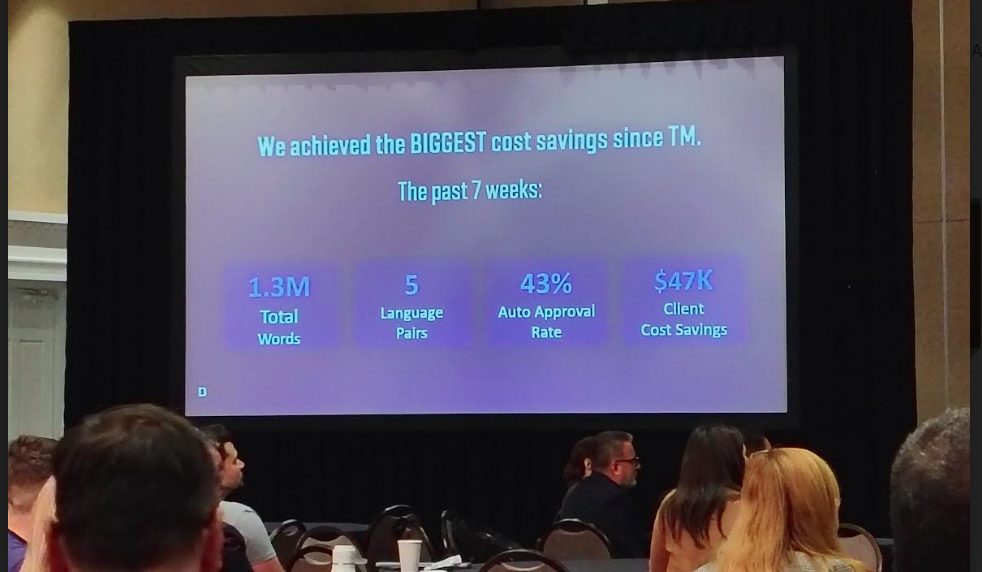

At the TAUS 2024 conference in Albuquerque, Andrew Miller presented an experiment at BLEND, a translation company in Israel. Their customer translated 1.9 million sentences (strings) with machine translation and had them thoroughly reviewed. The review showed that translators edited only about 15% of strings and left the rest untouched. The client decided to automatically detect sentences unlikely to be edited by human reviewers and stopped reviewing sentences scored above a certain confidence threshold. The resulting cost saving was 35%: $47k in the pilot project, with projected savings of about seven times as much per year. The impact on quality was not measured.

This is a landmark case because it claims clear economic benefits. Machine translation quality estimation is not new: it dates back at least eight years, to H. Kim and J.-H. Lee’s experiment in 2016, and even earlier. Since then, translation companies and in-house localization teams have explored this technology, including Uber, Welocalize, TransPerfect, RWS, Lilt, Smartling, Phrase, MotionPoint, and others. Every published study reported scores and correlations, but none disclosed the actual impact in USD.

Example cases:

- Capstan: a translation company in Belgium

- MotionPoint: a website translation company

- WMT: a scientific competition between QE systems

- Microsoft/ADAPT Center

- Huawei

BLEND’s case speaks the language of translation and business managers. It gives an incentive to teams that run localization programs under budget constraints, a common situation in the industry in 2024.

How MTQE Works

In popular languages, machine translation (MT) is often so good that some sentences require no editing. If a sentence is translated correctly on the first pass, in theory there is no need for expert linguists to review it. QE predicts the quality of machine translation automatically, so that correctly translated sentences can be excluded from the batch of work that humans need to look at. Translation tools lock sentences with high QE scores, much like 100% translation memory matches, and thus reduce the scope of the review task.
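The locking step can be sketched as a simple threshold check. This is a minimal illustration, assuming each segment already carries a QE score on a 0 to 1 scale; the `Segment` class, the `triage` function, and the sample scores are hypothetical and do not reflect any particular TMS's API.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    # Hypothetical segment record; not a real TMS data model.
    source: str
    target: str
    qe_score: float  # assumed 0..1, higher means more likely correct
    locked: bool = False


def triage(segments: list[Segment], threshold: float) -> float:
    """Lock segments at or above the threshold; return the share left for review."""
    for seg in segments:
        # Treat high-confidence MT like a 100% TM match: exclude it from review.
        seg.locked = seg.qe_score >= threshold
    to_review = sum(1 for seg in segments if not seg.locked)
    return to_review / len(segments) if segments else 0.0


# Made-up scores for illustration only.
batch = [
    Segment("Hello", "Hola", 0.97),
    Segment("Some text", "Soem texto", 0.41),
    Segment("Click Save", "Haga clic en Guardar", 0.93),
    Segment("Open the file", "Abrir", 0.55),
]
review_share = triage(batch, threshold=0.9)
print(f"Segments left for human review: {review_share:.0%}")
```

Here two of the four segments are locked, so the review scope halves; in practice the savings depend on how many segments clear the threshold.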

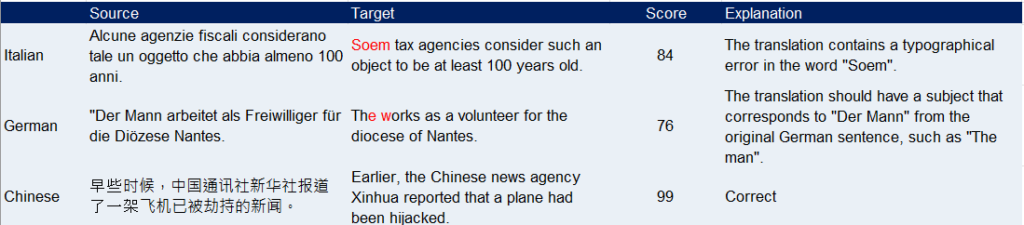

In the example above, QE detects a spelling error (“Soem”) in the first sentence and the omission of the subject in the second, and rates the third sentence as correctly translated. Typically, QE provides scores from 0 to 100 (Unbabel, GEMBA) or from 0 to 1 (TAUS, ModelFront, ModernMT). Users set their own threshold to separate high-confidence correct translations from incorrect or low-confidence ones. Common industry practice is to accept scores of 90% or higher and review everything below.
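Because providers report on different scales, applying one house threshold first requires putting scores on a common scale. A minimal sketch, assuming a plain linear rescale to 0 to 100 and the provider ranges listed above; `SCALE_MAX`, `to_percent`, and `accept` are hypothetical names. Rescaled scores from different models are not necessarily calibrated against each other, so a threshold should still be validated per model.

```python
# Native score ranges as described in the text (assumed linear, higher = better).
SCALE_MAX = {
    "Unbabel": 100.0,
    "GEMBA": 100.0,
    "TAUS": 1.0,
    "ModelFront": 1.0,
    "ModernMT": 1.0,
}


def to_percent(provider: str, score: float) -> float:
    """Rescale a provider's raw score to 0..100 (hypothetical helper)."""
    return score / SCALE_MAX[provider] * 100.0


def accept(provider: str, score: float, threshold: float = 90.0) -> bool:
    """Common practice: accept at 90% or higher, send the rest to review."""
    return to_percent(provider, score) >= threshold


print(accept("TAUS", 0.92))     # True
print(accept("Unbabel", 87.0))  # False
```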

Under the hood, machine translation quality estimation tools use language models. For a quick test, for example, it is possible to prompt ChatGPT to act as a quality assurance specialist.

Example prompt (GEMBA-MQM framework):

(System) You are an annotator for the quality of machine translation. Your task is to identify errors and assess the quality of the translation.

(user) {source_language} source:\n{source_segment}\n

{target_language} translation:\n{target_segment}\n \nBased on the source segment and machine translation surrounded with triple backticks, identify error types in the translation and classify them. The categories of errors are:

accuracy (addition, mistranslation, omission, untranslated text)

fluency (character encoding, grammar, inconsistency, punctuation, register, spelling)

locale convention (currency, date, name, telephone, or time format)

style (awkward)

terminology (inappropriate for context, inconsistent use)

Non-translation

Other

no-error.\n

Each error is classified as one of three categories: critical, major, and minor. Critical errors inhibit comprehension of the text. Major errors disrupt the flow, but what the text is trying to say is still understandable. Minor errors are technically errors, but do not disrupt the flow or hinder comprehension.

(assistant) {observed error classes}
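For reference, the prompt above can be assembled into the role-based message list that chat-style LLM APIs accept. This is a minimal sketch: the function name and the sample languages and segments are hypothetical, the wording follows the template above, and each segment is wrapped in triple backticks because the instruction text says the segments are "surrounded with triple backticks". Sending the messages and parsing the reply into error classes is not shown.

```python
FENCE = "`" * 3  # triple backticks around each segment, as the instruction expects

SYSTEM = (
    "You are an annotator for the quality of machine translation. "
    "Your task is to identify errors and assess the quality of the translation."
)

INSTRUCTIONS = (
    "Based on the source segment and machine translation surrounded with triple "
    "backticks, identify error types in the translation and classify them. "
    "The categories of errors are: accuracy (addition, mistranslation, omission, "
    "untranslated text), fluency (character encoding, grammar, inconsistency, "
    "punctuation, register, spelling), locale convention (currency, date, name, "
    "telephone, or time format), style (awkward), terminology (inappropriate for "
    "context, inconsistent use), non-translation, other, or no-error.\n"
    "Each error is classified as one of three categories: critical, major, and minor. "
    "Critical errors inhibit comprehension of the text. Major errors disrupt the flow, "
    "but what the text is trying to say is still understandable. Minor errors are "
    "technically errors, but do not disrupt the flow or hinder comprehension."
)


def gemba_mqm_messages(src_lang: str, tgt_lang: str, source: str, target: str) -> list[dict]:
    """Build system and user turns; the model's reply is the assistant turn."""
    user = (
        f"{src_lang} source:\n{FENCE}{source}{FENCE}\n"
        f"{tgt_lang} translation:\n{FENCE}{target}{FENCE}\n\n"
        + INSTRUCTIONS
    )
    return [
        {"role": "system", "content": SYSTEM},
        {"role": "user", "content": user},
    ]


messages = gemba_mqm_messages("English", "Spanish", "Some text", "Soem texto")
print(messages[1]["content"])
```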

Instead of relying on ChatGPT, specialized QE companies fine-tune open-source models like Mistral or Llama 2 with examples of the client’s translation errors and corrections. For example, Unbabel fine-tuned Llama 2 to create their newest QE engine TowerX. These smaller fine-tuned models are faster and cheaper to use at scale than OpenAI’s models.
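One way such training examples might be derived from a client's review history is sketched below. It assumes each record holds the source, the raw MT, and the reviewer's final version: a segment the reviewer left untouched is labelled as good, an edited one as needing review, which mirrors the "edited or not" signal in the BLEND case. The record type, label names, and JSONL layout are hypothetical; each vendor's fine-tuning format differs.

```python
import json
from dataclasses import dataclass


@dataclass
class ReviewedSegment:
    # Hypothetical record from a client's review history.
    source: str
    mt: str         # raw machine translation
    post_edit: str  # what the human reviewer delivered


def to_training_example(seg: ReviewedSegment) -> dict:
    # Untouched MT becomes a positive example; any edit marks an error case.
    edited = seg.mt.strip() != seg.post_edit.strip()
    return {
        "source": seg.source,
        "translation": seg.mt,
        "label": "needs_review" if edited else "ok",
        "correction": seg.post_edit if edited else None,
    }


history = [
    ReviewedSegment("Save changes?", "¿Guardar cambios?", "¿Guardar cambios?"),
    ReviewedSegment("Some text", "Soem texto", "Algo de texto"),
]
jsonl = "\n".join(json.dumps(to_training_example(s), ensure_ascii=False) for s in history)
print(jsonl)
```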

Paths to Adopt QE

For translation buyers, there are three options on how to add a quality estimation system.

Via a translation management system (TMS) with a built-in quality estimation model. This way, the translation buyer controls the technology, imposes it on the supply chain, and can maximize the savings. Examples include Phrase TQI and the QE systems of Smartling and XTM. The downside is that the buyer needs to manage the technology and may face strong resistance from suppliers who lose revenue and profit. Suppliers may change their pricing, invoke contract clauses, and contest accuracy scores.

As a standalone component. Specialized companies such as TAUS, Unbabel Tower, and ModelFront focus on QE development and offer customized models that are more accurate with client content. In this case, integration engineering between QE and TMS is required, and while the accuracy may be higher, so is the effort to operationalize.

Via an LSP. To simplify the technology stack, the buyer may ask their translation company to test and adopt QE and, if it succeeds, split the benefits. This simplifies procurement and leads to faster adoption, because LSPs resist less when they, too, stand to benefit from adding QE.

In every scenario, adopting quality estimation is a trade-off: the buyer gains some savings from QE but increases the risk of leaving machine translation errors undetected. Humans are not perfect either, and they too may fail to detect and fix machine translation errors, especially under time and budget pressure. However, when a human translator fails, responsibility may rest with a single person; when a system fails, the whole organization bears the liability. It is therefore important to test QE thoroughly and to avoid relying on it for high-stakes content that could endanger human life or finances.

Technical Requirements — TMSs need to add more QE integrations

Adopting QE requires integration with a translation management system (TMS):

- to create a flow of source and translated sentences to the model and back

- to lock out good-quality translations from editing by humans

- to flag poor-quality translations

- to display scores and error explanations to the linguist.
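Together, the four requirements above amount to one round trip per batch. A minimal sketch of the return leg, assuming a hypothetical `QEResult` from whatever QE service is integrated and two illustrative thresholds on a 0 to 100 scale (lock at 90 or above, flag below 50); `TmsSegment`, `apply_qe`, and the field names are not any TMS's actual API.

```python
from dataclasses import dataclass, field


@dataclass
class QEResult:
    # Hypothetical QE service output for one segment.
    segment_id: str
    score: float  # assumed 0..100
    explanations: list[str] = field(default_factory=list)


@dataclass
class TmsSegment:
    # Hypothetical TMS segment state.
    segment_id: str
    source: str
    target: str
    locked: bool = False
    flagged: bool = False
    note: str = ""


def apply_qe(segments: dict[str, TmsSegment], results: list[QEResult],
             lock_at: float = 90.0, flag_below: float = 50.0) -> None:
    """Write QE results back into the TMS segments."""
    for res in results:
        seg = segments[res.segment_id]
        seg.locked = res.score >= lock_at     # good quality: excluded from editing
        seg.flagged = res.score < flag_below  # poor quality: highlighted for the linguist
        # Score and error explanations displayed to the linguist.
        detail = "; ".join(res.explanations)
        seg.note = f"QE {res.score:.0f}" + (f" ({detail})" if detail else "")


segs = {
    "1": TmsSegment("1", "Some text", "Soem texto"),
    "2": TmsSegment("2", "Click Save", "Haga clic en Guardar"),
}
apply_qe(segs, [QEResult("1", 35, ["spelling: 'Soem'"]), QEResult("2", 96)])
for s in segs.values():
    print(s.segment_id, s.locked, s.flagged, s.note)
```

Segments between the two thresholds are neither locked nor flagged and go through normal review.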

Today, the choice of QE models integrated into TMSs is limited. Translation management systems see QE as a feature and prefer to develop it in-house rather than invite third-party components. That’s why Trados, Phrase, Smartling, and XTM have focused on creating their own models. In contrast, memoQ and Crowdin have followed an ecosystem approach and integrated external QE systems.

| TMS | QE System |
|---|---|
| RWS Trados | Own |
| memoQ | TAUS, ModelFront |
| Phrase | Own |
| Smartling | Own |
| Transifex | Own |
| XTM | Own |
| Crowdin | ModelFront |
| Lokalise, Wordfast | Not integrated |
| – | ModernMT, Unbabel, Kantan, Omniscien |

A possible downside of relying on a TMS’s own QE arises when its model is used together with machine translation engines trained on the same datasets. With shared training data, the estimates may be skewed toward higher scores, letting more errors slip through.

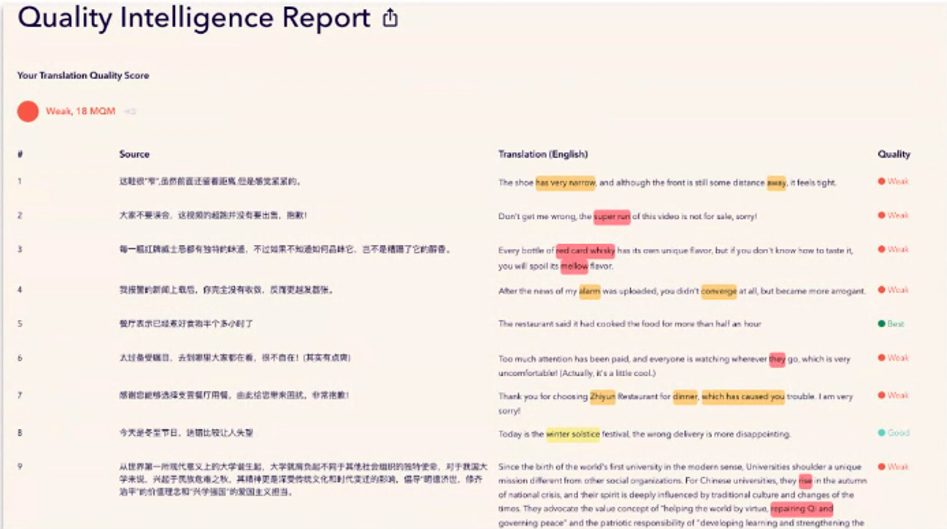

Translation companies and teams that developed their own translation toolsets, such as Straker and Unbabel, have built CAT tools with quality estimation in mind. For example, Unbabel’s translation tool, shown in the screenshot, highlights the words in a sentence that it predicts are poorly translated. This gives the linguist clear visual cues about where to focus their attention.
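Word-level QE is commonly expressed as one OK/BAD tag per target token, the convention used in the WMT word-level QE shared tasks. A minimal sketch of turning such tags into highlights for the linguist, assuming whitespace tokenization and square brackets in place of an editor's colouring; the `highlight` function is hypothetical and is not Unbabel's implementation.

```python
def highlight(target: str, tags: list[str]) -> str:
    """Wrap tokens tagged BAD in brackets so the linguist sees where to look."""
    tokens = target.split()  # assumed whitespace tokenization
    if len(tokens) != len(tags):
        raise ValueError("expected one OK/BAD tag per token")
    return " ".join(f"[{tok}]" if tag == "BAD" else tok for tok, tag in zip(tokens, tags))


print(highlight("Soem texto aquí", ["BAD", "OK", "OK"]))
# [Soem] texto aquí
```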

However, translation companies do not open their tools to competitors. A buyer who works with two translation companies can’t give the second supplier access to the first one’s tool.

As a result of early-stage partnerships between TMSs, QE providers, and LSPs, buyers have multiple quality estimation tools available, but they cannot adopt them quickly without changing either their TMS or their LSP. They can’t easily pick the one that works best for them, that is, the one that agrees with their linguists in 95% or more of cases.

Custom.MT experience with QE

At Custom.MT, we started our journey with QE by creating a tool that compares different models on user content and measures the percentage of sentences on which human judgment and the model agree.

We follow this workflow to measure the accuracy of QE systems:

- Upload a sample of correctly translated sentences (300 is a representative sample).

- Upload a sample of sentences with translation errors.

- The tool generates the scores with 4 engines: TAUS, Unbabel, ModernMT, and ChatGPT.

- Determine a threshold where the model may be trusted, for example, 90.

- Measure the % of correctly translated sentences that score 90 or higher.

- Measure the % of sentences with errors that score below that threshold.
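The last three steps reduce to a few rates per model. A minimal sketch, assuming scores on a 0 to 100 scale and two lists of scores from the uploaded samples; the `evaluate` function and the sample numbers are made up for illustration and are not results from the tool.

```python
def evaluate(correct_scores: list[float], error_scores: list[float], threshold: float) -> dict:
    """Benefit, detection rate, and overall agreement of one QE model at one threshold."""
    # Correct sentences the model would let through (score >= threshold).
    hits_ok = sum(s >= threshold for s in correct_scores)
    # Sentences with errors that fall below the threshold and go to review.
    hits_err = sum(s < threshold for s in error_scores)
    n_ok, n_err = len(correct_scores), len(error_scores)
    return {
        "benefit": hits_ok / n_ok,
        "detected": hits_err / n_err,
        # Share of all sentences where model and human judgment agree.
        "agreement": (hits_ok + hits_err) / (n_ok + n_err),
    }


result = evaluate([95, 92, 88, 97], [40, 91, 75, 93], threshold=90)
print(result)
```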

The output comparison will look like this:

| Model | Item | Accuracy |
|---|---|---|
| QE model 1 | Benefit: accurate translations scored as accurate | 75% |
| | Risk: sentences with errors detected | 80% |
| QE model 2 | Benefit: accurate translations scored as accurate | 89% |
| | Risk: sentences with errors detected | 35% |

The most important factor for most clients is minimizing the risk of errors left undetected. So when choosing a system, the lowest risk, that is, the highest share of errors detected, is the key factor in deciding which API to pick.

The comparison may be re-run at different score thresholds. For example, if the risk is high at a threshold of 90 points, we recalculate at 95 points and compare again.
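Re-running the comparison at several thresholds and ranking by risk can be sketched as follows. This assumes hypothetical score lists per model on a 0 to 100 scale; risk here is the share of error sentences scoring at or above the threshold (the complement of the detection rate in the table above), with ties broken by benefit. Model names and scores are illustrative only.

```python
def rates(correct: list[float], errors: list[float], t: float) -> tuple[float, float]:
    """Return (benefit, risk) at threshold t."""
    benefit = sum(s >= t for s in correct) / len(correct)
    risk = sum(s >= t for s in errors) / len(errors)  # errors that would be locked
    return benefit, risk


# Hypothetical samples: (scores of correct sentences, scores of sentences with errors).
samples: dict[str, tuple[list[float], list[float]]] = {
    "QE model 1": ([95, 92, 88, 97], [40, 91, 75, 93]),
    "QE model 2": ([99, 96, 94, 91], [92, 96, 70, 85]),
}


def pick_model(samples: dict[str, tuple[list[float], list[float]]], t: float) -> str:
    def key(name: str) -> tuple[float, float]:
        benefit, risk = rates(*samples[name], t)
        return (risk, -benefit)  # lowest risk first, then highest benefit
    return min(samples, key=key)


for t in (90, 95):
    print(t, pick_model(samples, t))
```

With these made-up numbers, both models leave half the errors undetected at 90, so the tie goes to model 2 on benefit; at 95, model 1 catches every error and becomes the safer choice, which shows why the comparison is worth repeating at more than one threshold.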

We recommend that buyers looking into quality estimation start with these tests to establish a benchmark of risk and reward and to evaluate how adopting this technology will affect their supply chains. Take the first step by requesting a demo of our automated QE tool.