Tools for Data Labeling in Machine Translation Evaluations

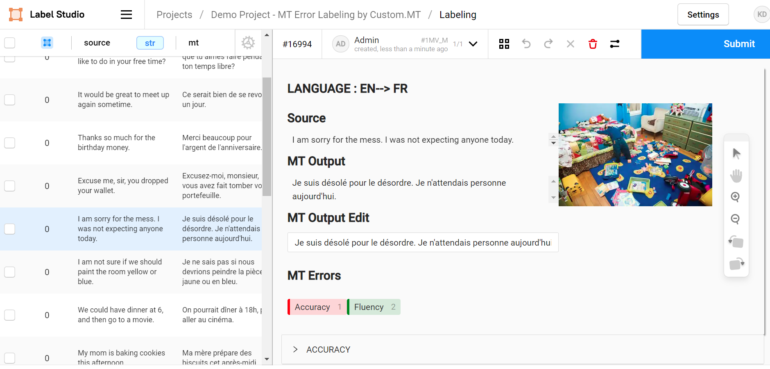

Running professional human evaluations of machine translation performance requires a detailed methodology and software tools to streamline the process. The most detailed approach to evaluation today is to label data such as errors and assign weights to critical issues using a variation of the DQF/MQM ontology. In this article, we outline Custom.MT’s journey to selecting and implementing […]