Founded in 2005 as a think tank, TAUS has turned into a prominent marketplace for machine translation training datasets, and a data services provider. Gone are the days of hosting conferences, the TAUS team is now busy collecting text and speech data for the likes of Google and Microsoft in a crowded space alongside Appen, Scale, Lionbridge, and others. We have discussed the reasons for this change, and the opportunities in the language data market in an interview with TAUS’ founder and localization icon Jaap van der Meer.

TAUS Story

At a LocWorld conference in 2005, Jaap convinced several enterprise localization managers to pool their translation data to advance machine translation – statistical MT at the time. Initially, the Society participants were paid with “data for data”: users uploaded translation memories and received credits to download other people’s data. The repository of human translations quickly grew to 75 billion words and fueled a rise in quality for translation providers.

Problems hit quickly. Hungry for high-quality data, new users joining TAUS poured junk translation memories into the repository to gain download credits. In parallel, websites all over the Internet began to publish raw MT, technically indistinguishable from human translation. Within a few years, a practitioner worth its salt couldn’t trust any third-party dataset anymore. This prompted TAUS to introduce data filtering and cut down 86% of data in the repository: the catalog today comprises 10 billion words.

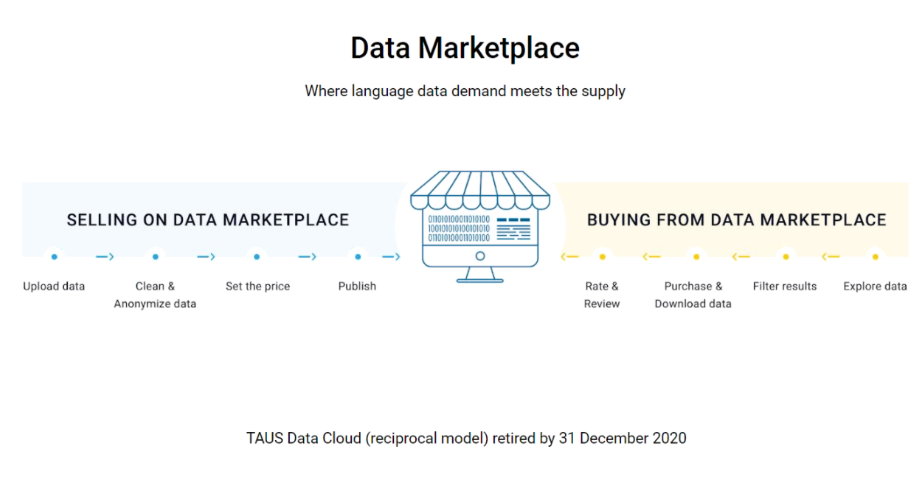

Jaap got convinced that the reciprocal model wasn’t working anymore. Instead, the TAUS team became intrigued by the idea of a marketplace open to anyone without the need to upload data. In a marketplace, smaller actors like individual translators and LSPs would be able to monetize their translation memories. TAUS teamed up with Translated and the Italian research facility Fondazione Bruno Kessler to secure funding for the marketplace from the European Commission. By November 2020, the first version went public.

It drew the interest of big tech companies, including Microsoft, Google, Amazon. Their data buyers kept sending requests for more unique and targeted data, and these requests prompted TAUS to take the next step and start providing data collection services.

Konstantin Dranch (KD): Jaap, you caused quite a storm when you started offering data services, and data providers were asking themselves, “What is he doing? He’s encroaching on our territory!”

Jaap van der Meer (JM): It’s been quite a journey because everybody knows TAUS as a think tank and we wanted to change that perception in the market. It was such a deeply rooted perception… But it worked!

KD: How big is data with TAUS now?

JM: Today, 50% of the business is data sales, and 50% is data services.

KD: What triggered the change? Was it the pandemic? TAUS was big on conferences before it, and the lockdowns killed that business.

JM: It’s easy to believe so. In 2019 when we organized around 15 events, we had a record income from conferences of 1 million. In 2020 it was zero, in 2021 it was zero. But it wasn’t the pandemic, no. It started internally, back in 2018. It got harder to remain a think tank. The future is coming closer and it becomes more difficult to predict. So I said, “We’ve done the talk, let’s walk the walk”. We started to think about how to be more commercial with our data. That’s also when we got really serious about optimizing and cleaning our data repository.

Opportunity in Data

KD: Jaap, what’s in store for data business, from your perspective?

JM: A big shift to speech! Even though our marketplace today is pure text, in on-demand services, however, we collect more speech than text. There is a big interest in dialects, different voices, age groups, and ethnicity. For each language, we’re speaking about thousands of voices, and collecting data for the variety is a big thing.

KD: Do you already have recording rooms where people read conversations from scripts?

JM: Yep. And spontaneous speech, unscripted.

KD: And that sells at $300 per hour?

JM: No, not anymore.

KD: Do you believe diversity and inclusion have a part to play in creating a market for language data?

JM: Definitely. Some of our interesting projects in speech data collection cover accents from different cities, and from different ethnicities. Big tech companies now have a perspective that the conversational AI they are developing needs to speak the language of every individual, and not of a country.

KD: What about text?

JM: You would see the same in text. The language of gamers is very different from the language of medical specialists. Everybody speaks English, but it’s a different English.

The holy grail there for the big tech companies is to cover domains and especially low-resourced languages with less data, using massively multilingual models to make the model so effective that you can start machine translating any language without data, at some point. But they are not there yet, and getting there will take a lot of time. In the meanwhile, we get requests for text data and low-resource languages, asking, “Do you have data for Indic languages, Southeast Asian languages, and African languages?”; We say: “No, but we can produce them”.

KD: Competition in the data marketplaces has exploded. On the popular HuggingFace community, the iconic OPUS machine translation dataset gets downloaded hundreds more times per month than on its official website. How does TAUS fit in the new paradigm?

JM: When we started on the data marketplace project, we had no idea that marketplaces would become so popular within just a short time. Now we’re seeing advances from Snowflake, Google, Microsoft, and AWS: everybody’s inviting us to upload and publish the TAUS datasets on their platforms. And I’m saying, “Well, we have our own marketplace, you know, why would we publish our assets on other people’s marketplace?”.

KD: Does open-source data present a threat to data providers? Just in Europe, there are about 12 different institutions that obtain data and publish it regularly. Why would anyone use a marketplace when they can download it for free?

JM: Before we started the TAUS data marketplace, we looked at the Evaluations and Language Resources Distribution Agency (ELDA) in France and at the Linguistic Data Consortium (LDC) in the US. And we found out that it’s different. They store data as files, and the buyer has to do the heavy lifting. With us, a user can upload a query corpus and search the entire repository on a segment basis, retrieve matching segments, and create a training dataset. That’s not possible on any of the agencies that you mentioned, I dare to say.

KD: Why didn’t you just take all that EU data, license it, and increase your repository size by a significant amount, while providing the TAUS interface to data?

JM: Maybe that’s an idea (smiles). It would have to be a massive undertaking, I guess. But yeah,

perhaps we’ll go there.

KD: What is the most interesting next project for TAUS Data?

JM: This one I can talk about is a big partnership. We’ve come up with a new product we call the DeMT Estimate API. It’s a quality prediction and quality estimation engine.

KD: Like Comet QE.

JM: Yeah, it’s based on Comet QE. It’s using our sentence embeddings applied to machine translation’s output segment. We give a score that predicts the quality of the machine translation’s performance in that segment. Based on the score, the customer can decide, “Okay, this goes straight to the end-user, and that part needs to have some post-editing first”.

KD: Lastly, what’s your advice to our peers? Let’s speak to the four industry groups: the buyers, the localization PMs, the translators, and the machine learning people.

JM: The revolution in AI continues, and I think the awareness of the trends is still very limited. At the core of the industry, there are very smart people who definitely understand what’s going on, and they’re doing all the right things.

But the vast majority is still somewhat ignorant on the technology front, and that’s in a way dangerous for their continued tenure in the profession. But I would say to everybody: open up your perspectives. Don’t accept the baseline AI, understand your own data, and understand the power of the translation memory that you’ve built up over the past decades. How can you utilize that to preserve your sort of unique position as a specialist in this area and this language? Work on your data!

About Jaap Van Der Meer

An iconic pioneer, Jaap Van Der Meer began his career in a world that didn’t yet know about localization, before the term was coined. As a Dutch language and culture student, he started working at IBM, and by mixing computers with languages, he invented some of the world’s first terminology and translation tools. He then founded a translation company, INK, which became one of the largest in the industry, and directed Localization Industry Standards Association (LISA), responsible for interoperability in translation technology. He also was President and CEO of ALPNET Inc., which is one of the first translation companies to be listed in the NASDAQ, an important American stock exchange. Today Jaap is a regular speaker at conferences and the author of many articles about technologies, translation, and globalization trends.